DESIGN FOR TESTABILITY

What is DFT?

DFT stands for design for testability. During the design process, DFT adds test features that make it possible to detect manufacturing defects during production. These features provide controllability and observability of the chip’s internal nodes, so that each manufactured chip can be classified as faulty or fault-free. DFT also reduces test cost and improves test efficiency.

Why DFT?

In addition to reducing debug time and increasing the test quality of chips, DFT provides a structured way of detecting manufacturing defects.

What is functional testing?

In functional testing, all possible input patterns are applied to test a design’s functionality.

Disadvantages:

- Functional testing is a time-consuming process.

For example, a design with n inputs requires 2^n patterns in functional testing.

- It increases test time and cost.

- It increases test memory.
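The 2^n growth is what makes exhaustive functional testing impractical. The Python sketch below enumerates every pattern for a small design and estimates the time for a larger one; the application rate of 10^9 patterns per second is an assumed figure for illustration only.

```python
from itertools import product
from typing import List, Tuple


def exhaustive_patterns(n: int) -> List[Tuple[int, ...]]:
    """Every input combination of an n-input design: 2**n patterns."""
    return list(product((0, 1), repeat=n))


def exhaustive_time_seconds(n: int, patterns_per_second: float) -> float:
    """Time to apply all 2**n patterns at an assumed application rate."""
    return 2 ** n / patterns_per_second


print(len(exhaustive_patterns(3)))                 # 8
seconds = exhaustive_time_seconds(64, 1e9)         # assumed 1e9 patterns per second
print(round(seconds / (365 * 24 * 3600)))          # 585 (years)
```

Three inputs need only eight patterns, but under the same assumed rate a 64-input block would need roughly 585 years.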

Note: Design for testability (DFT) overcomes these disadvantages of functional testing.

DFT testing strategies

As feature sizes shrink, the manufacturing process plays an increasingly significant role in chip defects, so chips must be tested for manufacturing defects cost-effectively.

DFT testing strategies are of two types

- Ad-hoc testing

- Structural testing

Ad-hoc testing:

Ad-hoc testing is a manual approach that replaces design practices that are bad for testability with good ones.

It has no formal, documented methodology, and it does not require major design changes.

Some common ad-hoc techniques:

- Reduce complex combinational logic

- Remove redundant logic

- Avoid synchronous and asynchronous resets

- Partition large designs into small design blocks

Disadvantages:

- Ad-hoc testing is time-consuming.

- Skilled DFT engineers are required.

- There is no guarantee of high fault coverage when tests are generated manually.

Note: Structural testing is used to overcome the disadvantages of ad-hoc testing.

Structural testing:

The purpose of structural testing is to select test patterns based on the structure of the circuit and a set of fault models. It verifies the correctness of the circuit structure, but not the functionality of the whole design.

Advantages:

- Improves design coverage

- Improves test efficiency

- Decreases test time

- Decreases test cost

Structural testing types:

- SCAN

- SCAN compression

- JTAG/Boundary SCAN

- BIST

- MBIST

- LBIST

Basics:

Defect:

A defect is a physical imperfection or flaw introduced during the manufacturing process.

Fault:

A logical representation and modeling of that defect is called a fault.

Failure:

A failure is a deviation of the circuit’s behavior or performance from its specification.

Error:

An error is a wrong output signal produced by a defective circuit

Controllability:

The ability to place known values on a net (for example, by shifting them in through scan chains) is called controllability.

Observability:

The ability to observe the value of a net or gate output, after it has been driven to a known logic value, is called observability.

Fault equivalence:

Two faults f1 and f2 are equivalent if the circuit with fault f1 has the same output function as the circuit with fault f2. Any test pattern that detects one of them therefore detects the other, so a single pattern covers the whole group of equivalent faults.

Fault collapsing:

Fault collapsing reduces the list of stuck-at faults (stuck-at-0 and stuck-at-1 on every net) that must be targeted in a design, by using fault equivalence and fault dominance.

Fault dominance:

If every test that detects fault f1 also detects fault f2, then f2 dominates f1. In that case f2 can be removed from the fault list and only f1 is retained, because any test for f1 also detects f2. This relationship is called fault dominance.
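Equivalence, dominance, and collapsing can be checked by brute force on a 2-input AND gate z = a AND b. The Python sketch below injects every stuck-at fault, computes each fault’s test set, groups faults with identical faulty output functions, and finds dominance relations. Dropping the dominating faults is the usual simplification for a single gate; this is an illustration, not a complete collapsing algorithm for large circuits.

```python
from itertools import product
from typing import Dict, List, Optional, Set, Tuple

Fault = Tuple[str, int]                        # (net, stuck-at value)
FAULTS: List[Fault] = [(net, v) for net in "abz" for v in (0, 1)]
VECTORS = list(product((0, 1), repeat=2))      # every (a, b) input combination


def and_gate(a: int, b: int, fault: Optional[Fault] = None) -> int:
    """z = a AND b, optionally with one stuck-at fault injected."""
    if fault and fault[0] == "a":
        a = fault[1]
    if fault and fault[0] == "b":
        b = fault[1]
    z = a & b
    if fault and fault[0] == "z":
        z = fault[1]
    return z


def name(f: Fault) -> str:
    return f"{f[0]}/{f[1]}"


# Tests for a fault: vectors where the faulty output differs from the good one.
tests: Dict[Fault, Set[Tuple[int, int]]] = {
    f: {v for v in VECTORS if and_gate(*v) != and_gate(*v, fault=f)} for f in FAULTS
}

# Equivalence: faults whose faulty circuits have the same output function.
classes: Dict[Tuple[int, ...], List[str]] = {}
for f in FAULTS:
    table = tuple(and_gate(*v, fault=f) for v in VECTORS)
    classes.setdefault(table, []).append(name(f))
equivalent = [c for c in classes.values() if len(c) > 1]

# Dominance: f2 dominates f1 if every test for f1 also detects f2 (proper subset).
dominance = [(name(f2), name(f1)) for f1 in FAULTS for f2 in FAULTS
             if f1 != f2 and tests[f1] < tests[f2]]

# Collapsing: one fault per equivalence class, minus the dominating faults.
dominating = {d for d, _ in dominance}
collapsed = sorted({c[0] for c in classes.values()} - dominating)

print(equivalent)   # [['a/0', 'b/0', 'z/0']]
print(dominance)    # [('z/1', 'a/1'), ('z/1', 'b/1')]
print(collapsed)    # ['a/0', 'a/1', 'b/1']
```

The result matches the familiar figure for an n-input AND gate: n + 1 faults remain out of the original 2(n + 1).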

DFT flow:

The DFT flow has four stages:

- SCAN

- SCAN compression

- ATPG

- Simulation

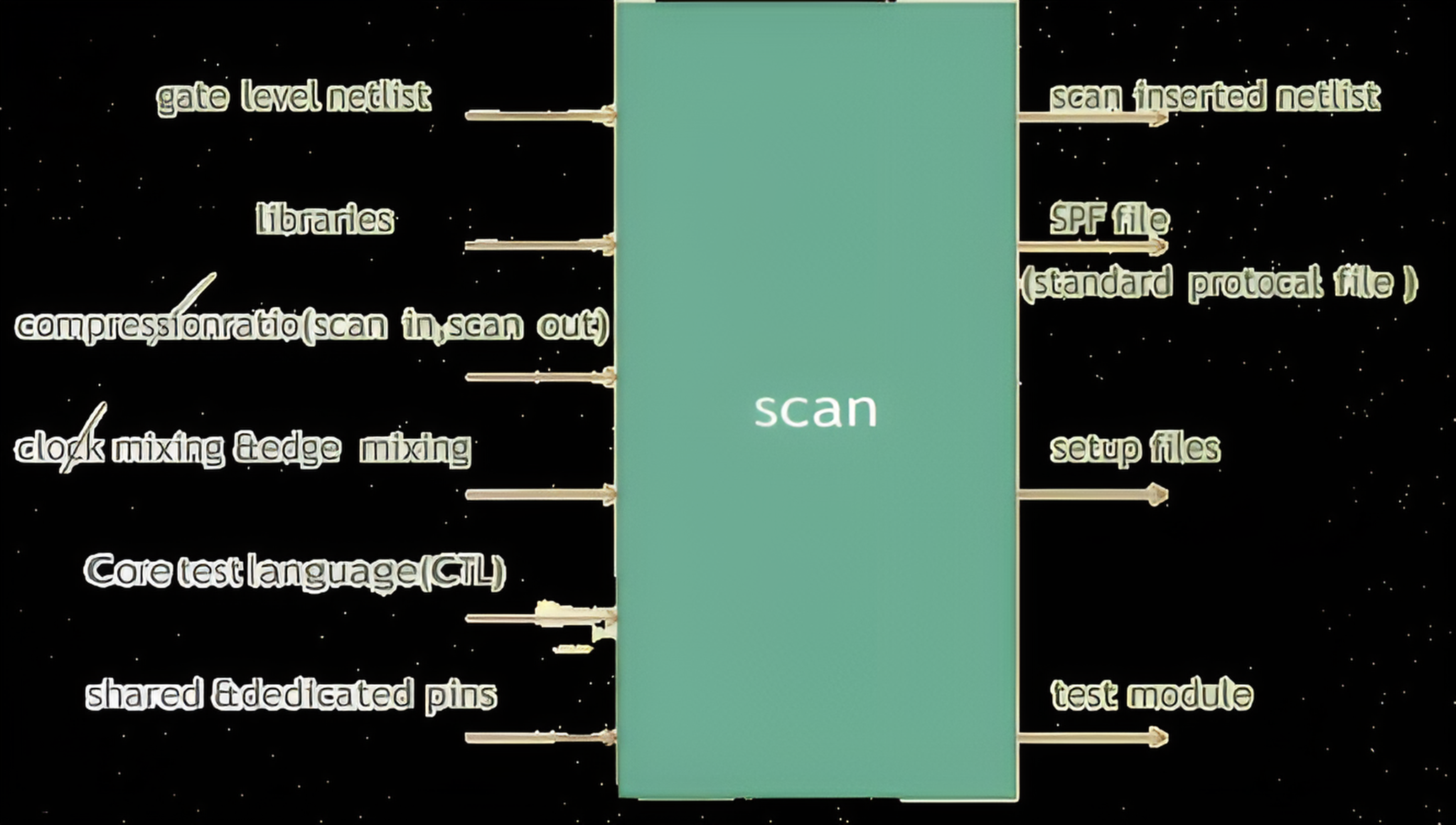

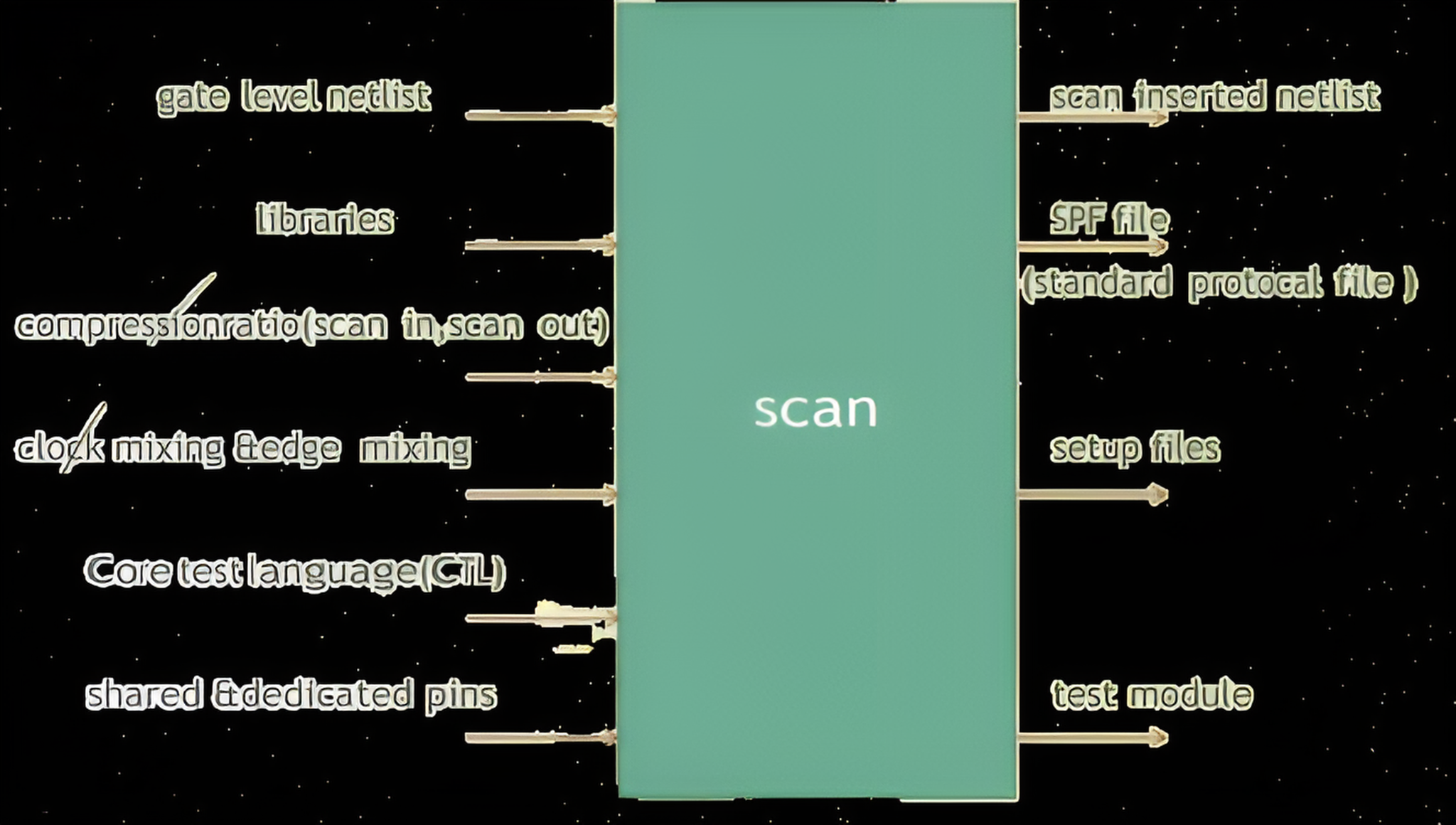

SCAN

Need for SCAN:

- In a small, purely combinational circuit, it is easy to control the internal nodes from the primary inputs (PIs) and observe the response at the primary outputs (POs).

- Today’s designs tend to be complex and contain sequential cells.

- The internal nodes are fed by flip-flops that are logically far from the primary inputs and outputs, which makes the internal nodes difficult to test.

Scan lets us apply stimulus to internal nodes by loading the flip-flops that feed them, and observe the response by capturing it in scan cells and shifting it out in shift-register mode.

SCAN Principle

First, each normal flop is converted into a scan flop by adding a multiplexer.

The scan flops are connected serially into shift registers (scan chains), which are used to load test patterns directly into the internal design. A capture clock is then applied to capture the internal logic’s response into the scan cells, and the captured values are shifted out through the scan-out port.
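The shift, capture, and shift-out sequence can be modeled in a few lines of Python. The four-flop chain and the combinational logic (`xor_neighbour`) are hypothetical; `shift` models SE = 1 (each flop loads SI from the previous flop) and `capture` models SE = 0 (each flop loads DI from the logic).

```python
from typing import Callable, List

Bits = List[int]


def shift(chain: Bits, scan_in: Bits) -> Bits:
    """Shift mode (SE = 1): one bit per clock enters at flop 0.
    Returns the bits seen at scan-out (last flop), in the order they emerge."""
    scan_out = []
    for bit in scan_in:
        scan_out.append(chain[-1])   # scan-out is driven by the last flop
        chain[1:] = chain[:-1]       # each flop takes its neighbour's value (SI path)
        chain[0] = bit               # first flop takes the scan-in value
    return scan_out


def capture(chain: Bits, logic: Callable[[Bits], Bits]) -> None:
    """Capture mode (SE = 0): one clock loads the combinational response (DI path)."""
    chain[:] = logic(chain)


def scan_test(pattern: Bits, logic: Callable[[Bits], Bits]) -> Bits:
    chain = [0] * len(pattern)
    shift(chain, pattern[::-1])                 # load: last pattern bit goes in first
    capture(chain, logic)
    unloaded = shift(chain, [0] * len(chain))   # unload (a tester overlaps this with the next load)
    return unloaded[::-1]                       # reorder to flop 0 .. flop n-1


def xor_neighbour(s: Bits) -> Bits:
    """Hypothetical combinational logic between the scan flops."""
    return [s[i] ^ s[(i + 1) % len(s)] for i in range(len(s))]


print(scan_test([1, 0, 1, 1], xor_neighbour))   # [1, 1, 0, 0]
```

Because the first bit shifted in ends up in the last flop, the pattern is shifted in reversed, and the unloaded bits are reversed again to match flop order.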

SCAN Operation:

Scan inputs & outputs

SCAN:

Advantages:

- Provides controllability and observability of nearly every internal node

- Turns the design’s storage elements into shift registers (scan chains)

- Improves test efficiency

- Makes test pattern generation easier

- Increases fault coverage

Disadvantages:

- Area overhead

- Increased power consumption

- Possible timing issues

- Increased routing congestion

SCAN Cells:

Based on the signal that provides scan shift capability, there are three scan cell styles:

- Muxed scan cell

- Clocked scan cell

- LSSD scan cell

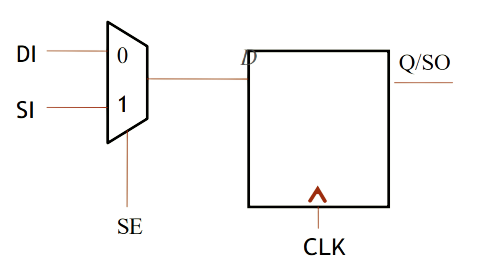

Multiplexer scan cells:

This scan cell is composed of a D flip-flop and a multiplexer. The multiplexer uses a scan enable (SE) input to select between the data input (DI) and the scan input (SI). In normal (and capture) mode, SE is set to 0 and the flop loads DI; in shift mode, SE is set to 1 and the flop loads SI.

Advantages:

- It requires only one clock

- Simple design

Disadvantages:

- The area of the design increases.

- Adds a multiplexer delay to the functional path

Clocked scan cells:

It is similar to a muxed scan cell, but instead of a scan enable multiplexer it uses two separate clocks for capturing and shifting: the data clock for capture and a dedicated edge-triggered test clock for scan shift.

- Data clock/functional clock

- Scan clock/shift clock/test clock

Advantages:

- Dedicated clocks are used for capturing and shifting

- No multiplexer in the functional path, so no performance degradation

Disadvantages:

- It requires an additional shift clock

- Requires additional routing

LSSD SCAN Cell:

LSSD (level-sensitive scan design) replaces edge-triggered flip-flops with LSSD-compatible cells that use separate master-slave test clocks for scan shift.

Advantages:

- Allows scan insertion into latch-based designs

- Guarantees race-free operation

- Avoids timing errors during scan shift

Disadvantages:

- Requires additional routing for the additional clocks, which increases routing complexity

- Clock signals are more difficult to control

SCAN Types:

Based on testing requirements, scan designs are divided into three types:

- Full scan

- Partial scan

- Partition scan

Full scan design:

In the full scan design all storage elements are converted into scan cells.

Advantages:

- Highly automated process

- High fault coverage

- Highly effective

Disadvantages:

- Area overhead

- Possible timing issues

Partial scan design:

The partial-scan design only requires that a subset of storage elements be replaced with scan cells and connected into scan chains.

Advantages:

- Reduced area impact

- Reduced timing impact

Disadvantages:

- Lower test coverage

Partition scan design:

In partition scan design, the design is divided into partitions, and each partition is then converted into its own scan chains.

Advantages:

- Improves test coverage and run time

- Lower power consumption

- High test coverage

Disadvantages:

- Need to check the interconnections between two blocks.

Note: Wrapper cells may be required to test the interconnections between two blocks.

SCAN design rules:

Scan design rules are also called scan DRCs.

To implement scan in a design, the design must comply with the scan design rules, because violations limit fault coverage. Rule violations must be fixed so that both shift and capture operations work correctly.

Major scan DRCs:

- All internal clocks must be controllable from the top level.

- Set and reset signals must be controllable from the top level.

- Avoid combinational feedback loops in the design.

- Latches in the design must be made transparent during test.

- Existing shift registers do not need to be converted into scan flops.

- Bypass memories if memory elements are present in the design.

- Avoid multicycle paths as much as possible.

- Keep coupling between clock and data to a minimum.

- Scan enable signals must be buffered.

Edge mixing

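If a positive-edge flop is followed by a negative-edge flop in a scan chain, data mismatches occur: with a clock that rises before it falls, the negative-edge flop captures the value the positive-edge flop has just loaded in the same shift cycle, so one bit is lost. These mismatches are avoided in two ways:

- Order the chain so that negative-edge flops come before positive-edge flops.

- Insert a lockup latch between a positive-edge flop and the negative-edge flop that follows it.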
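The edge-mixing problem can be reproduced with a small zero-delay Python model. It assumes one return-to-zero shift clock (rising edge, then falling edge in each period), rising-edge flops marked `pos`, falling-edge flops marked `neg`, and a lockup latch marked `latch` that is transparent while the clock is low; all of these names are hypothetical.

```python
from typing import List


def shift_cycle(types: List[str], q: List[int], si: int) -> List[int]:
    """One return-to-zero shift clock on a single-clock chain.
    types[i] is 'pos' (rising-edge flop), 'neg' (falling-edge flop) or
    'latch' (lockup latch, transparent while the clock is low)."""
    def edge(kind: str, state: List[int]) -> List[int]:
        d = [si] + state[:-1]                  # each element's D is its predecessor's Q
        return [d[i] if t == kind else state[i] for i, t in enumerate(types)]

    q = edge("pos", q)                          # rising edge: all rising-edge flops at once
    q = edge("neg", q)                          # falling edge: all falling-edge flops at once
    for i, t in enumerate(types):               # low phase: lockup latches go transparent
        if t == "latch":
            q[i] = si if i == 0 else q[i - 1]
    return q


def load(types: List[str], bits: List[int]) -> List[int]:
    q = [0] * len(types)
    for b in bits:
        q = shift_cycle(types, q, b)
    return [v for v, t in zip(q, types) if t != "latch"]   # flop contents only


# Shifting in 1 then 0 should leave [0, 1] in a two-flop chain.
print(load(["pos", "neg"], [1, 0]))            # [0, 0]  a bit is lost
print(load(["neg", "pos"], [1, 0]))            # [0, 1]
print(load(["pos", "latch", "neg"], [1, 0]))   # [0, 1]
```

Both fixes from the list give the expected contents: reordering so the negative-edge flop comes first, or keeping the order and adding the lockup latch.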

Scan balancing:

The scan vector length depends on the longest scan chain in the design. Vectors for shorter chains must be padded (X-filled) to that length, which wastes tester memory and test time. Scan balancing overcomes this by distributing the flops so that all scan chains have equal (or nearly equal) lengths.
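The effect of balancing on shift cycles and X-fill can be computed directly. The Python sketch below compares a hypothetical unbalanced split of 1,000 flops over four chains with an even split; the helper names are illustrative.

```python
from typing import List


def balance(num_flops: int, num_chains: int) -> List[int]:
    """Spread flops over chains so that lengths differ by at most one."""
    base, extra = divmod(num_flops, num_chains)
    return [base + 1 if i < extra else base for i in range(num_chains)]


def shift_cycles(chain_lengths: List[int]) -> int:
    """Shift cycles per pattern are set by the longest chain."""
    return max(chain_lengths)


def padding_bits(chain_lengths: List[int]) -> int:
    """X-fill bits per pattern: every chain is padded up to the longest chain."""
    longest = max(chain_lengths)
    return sum(longest - n for n in chain_lengths)


unbalanced = [400, 300, 200, 100]      # hypothetical 1,000 flops on 4 chains
balanced = balance(1000, 4)            # [250, 250, 250, 250]
print(shift_cycles(unbalanced), padding_bits(unbalanced))   # 400 600
print(shift_cycles(balanced), padding_bits(balanced))       # 250 0
```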

Scan compression:

Scan compression is a technique that reduces the test time, test cost, and tester memory required for a design.

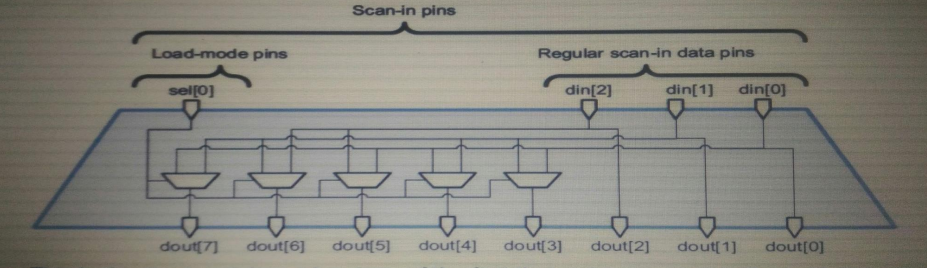

In compression, the long scan chains between the scan inputs and scan outputs are broken up into many shorter internal scan chains. In other words, the number of scan chains increases without increasing the number of scan channels.

The length of each scan chain therefore decreases without adding scan ports.

Advantages:

- Reduce test cost

- Reduce test time

- May reduce test memory/data

Disadvantages:

- Area overhead

Scan compression architecture:

Scan compression main blocks:

- Decompressor

- Compressor

- Masking logic

Compression block diagram:

Decompressor:

The decompressor can be built as a broadcast (spreader) network of multiplexer logic driven by the scan input pins. It broadcasts the input patterns from the scan channels to the internal scan chains, and the multiplexer connections are chosen randomly. For each shift cycle, ATPG chooses load-mode and scan data values that steer the required values into the internal (compressed) scan chains.

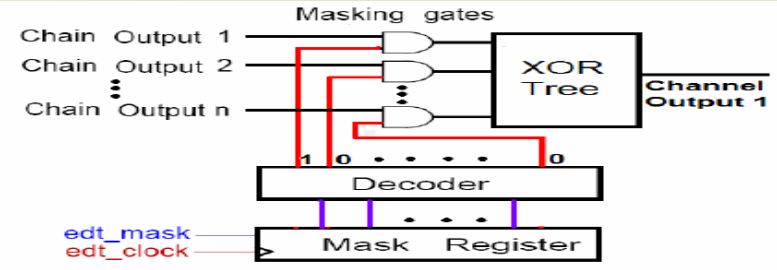
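The broadcast idea can be illustrated with a toy model. In the Python sketch below, the two-channel, six-chain decompressor and its two load-mode mappings (`MODES`) are hypothetical and far smaller than a real one; `solve_slice` simply brute-forces the load mode and channel values that satisfy the care bits of one shift cycle, which is the choice the paragraph above describes ATPG making.

```python
from itertools import product
from typing import List, Optional, Tuple

# Hypothetical broadcast decompressor: 2 scan channels feeding 6 internal chains.
# MODES[m][c] is the channel that drives internal chain c in load mode m.
MODES: List[List[int]] = [
    [0, 0, 0, 1, 1, 1],
    [0, 1, 0, 1, 0, 1],
]
NUM_CHANNELS = 2


def decompress(mode: int, channels: Tuple[int, ...]) -> List[int]:
    """Values shifted into each internal chain for one shift cycle."""
    return [channels[src] for src in MODES[mode]]


def solve_slice(care: List[Optional[int]]) -> Optional[Tuple[int, Tuple[int, ...]]]:
    """Find a (load mode, channel values) pair that meets every care bit.
    care[c] is 0 or 1 for a required value, or None for a don't-care (X)."""
    for mode in range(len(MODES)):
        for channels in product((0, 1), repeat=NUM_CHANNELS):
            out = decompress(mode, channels)
            if all(want is None or want == got for want, got in zip(care, out)):
                return mode, channels
    return None   # not encodable in this shift cycle


print(solve_slice([1, 0, None, None, 1, None]))   # (1, (1, 0))
print(solve_slice([1, 0, 0, 1, None, None]))      # None
```

When a slice cannot be encoded, ATPG has to place those care bits differently, which is one reason compressed patterns can be harder to generate than uncompressed ones.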

Compressor:

The compressor uses XOR logic to compact the data from the many internal scan chains into the smaller number of scan-out channels.

Masking logic:

If the design contains X-sources (logic that can drive unknown X values), these X values can reach the scan chain outputs.

Such X values reduce test coverage, so the X-sources must be masked with masking logic.

Masking logic addresses two problems:

- X-masking

- Aliasing effect

Masking logic

X-masking:

- If any internal scan chain drives an X value into the XOR logic, the XOR output on that scan channel also becomes X, hiding the values of the other chains that share the channel.

- X-masking uses AND gate logic to block the output of that particular scan chain before it reaches the XOR logic.

Aliasing effect:

- Aliasing occurs when the compressed output has the same value with and without a fault, for example when two fault effects reach the same XOR gate in the same shift cycle and cancel each other.

- Masking one of the chains with the AND logic can eliminate this effect.
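The following Python sketch models one scan channel of an XOR compressor with AND-gate masking, to show both effects described above. X is modeled as `None`, and the per-chain `mask` list is a hypothetical stand-in for the masking control that a real tool would load.

```python
from typing import List, Optional

Bit = Optional[int]   # 0, 1, or None for an unknown (X) value


def compact(chain_outs: List[Bit], mask: List[int]) -> Bit:
    """XOR the scan-out bits of the chains that share one scan channel.
    mask[c] == 1 drives chain c's AND gate to 0, so that chain contributes 0."""
    result: Bit = 0
    for bit, m in zip(chain_outs, mask):
        gated = 0 if m else bit            # AND-gate masking
        if gated is None or result is None:
            result = None                  # X XOR anything is X
        else:
            result ^= gated
    return result


no_mask = [0, 0, 0]
print(compact([1, None, 1], no_mask))      # None: one X hides the whole channel
print(compact([1, None, 1], [0, 1, 0]))    # 0: chain 1 masked, chains 0 and 2 observed

# Aliasing: a fault flips chains 0 and 1 in the same shift cycle.
good, faulty = [0, 0, 1], [1, 1, 1]
print(compact(good, no_mask), compact(faulty, no_mask))          # 1 1  fault hidden
print(compact(good, [0, 1, 0]), compact(faulty, [0, 1, 0]))      # 1 0  fault visible
```

Masking trades observability for reliability: the masked chain is not observed in that cycle, so masks are applied only where they are needed.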

Compression ratio:

The compression ratio relates the number of internal scan chains to the number of scan channels in the design. It is defined as the ratio of internal scan chains to scan channels.

Example:

- Number of flops in the design: 64,800

- Number of scan channels: 6

- Number of internal scan chains: 360

Compression ratio = 360 / 6 = 60x

Note: The minimum compression ratio is 3 in Synopsys tools.
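The compression arithmetic can be checked with a few lines of Python. The helper below (a hypothetical name) uses the example numbers above and also derives the chain length with and without compression, assuming the flops are split evenly and that without compression each of the 6 channels drives one chain.

```python
from typing import Tuple


def compression_stats(num_flops: int, scan_channels: int,
                      internal_chains: int) -> Tuple[float, int, int]:
    """Return (compression ratio, chain length without compression,
    chain length with compression), assuming flops are split evenly."""
    ratio = internal_chains / scan_channels
    length_without = -(-num_flops // scan_channels)     # ceiling division
    length_with = -(-num_flops // internal_chains)
    return ratio, length_without, length_with


print(compression_stats(64_800, 6, 360))   # (60.0, 10800, 180)
```

With compression each pattern needs about 180 shift cycles instead of 10,800, which is 60 times fewer and matches the compression ratio.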

Compression with bypass:

In scan compression mode if there is any fault inside the decompressor or compressor logic the output indicates faulty circuit.By using compression bypass technique we can find if compressor or decompressor inside logic is correct or not.

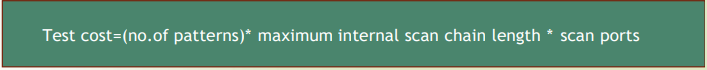

Formulas:

Test time:

Test time is the time taken to apply all the scan test patterns.

It depends mainly on the longest scan chain in the design, because every pattern needs that many shift cycles.

Test cost:

Test cost is proportional to test time.
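A common first-order estimate makes this concrete: assume each pattern’s unload overlaps the next pattern’s load, so the tester needs one extra unload at the end plus the capture cycles. The Python sketch below encodes that approximation; the pattern count (1,000) and the 50 MHz shift clock are assumed values, and the chain lengths (180 and 10,800 flops) come from splitting the 64,800-flop compression example over 360 and 6 chains.

```python
def scan_test_cycles(num_patterns: int, longest_chain: int, capture_cycles: int = 1) -> int:
    """Approximate tester cycles: each unload overlaps the next load,
    plus one final unload and the capture cycles of every pattern."""
    return (num_patterns + 1) * longest_chain + num_patterns * capture_cycles


def scan_test_time_s(num_patterns: int, longest_chain: int,
                     shift_freq_hz: float, capture_cycles: int = 1) -> float:
    """Test time in seconds, assuming every cycle runs at the shift frequency."""
    return scan_test_cycles(num_patterns, longest_chain, capture_cycles) / shift_freq_hz


patterns, f_shift = 1000, 50e6                      # assumed values
print(scan_test_cycles(patterns, 180))              # 181180   (compressed)
print(scan_test_cycles(patterns, 10_800))           # 10811800 (uncompressed)
print(scan_test_time_s(patterns, 180, f_shift))     # about 0.0036 s
```

Under these assumptions the compressed design needs about 3.6 ms versus about 216 ms without compression. Compression can also increase the pattern count, so the real saving may be smaller than the chain-length ratio.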

Advanced compression technique:

Adaptive scan

- Additional logic can be added to adaptive scan to reduce the scan pin count.

- Selective scan channels

Scan compression with adaptive compression:

Adaptive compression:

Main blocks of advanced compression:

Serializer:

- It takes the data from the compressor in parallel and converts it to serial data.

Deserializer:

- Serial input patterns from the ATE are converted to parallel data and fed to the decompressor.
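Functionally, the deserializer and serializer are shift registers that trade pin count for clock cycles. The Python sketch below is a behavioral model only; the word width (the number of decompressor inputs fed from one ATE pin) is an assumed parameter, and the function names are hypothetical.

```python
from typing import Iterable, List


def deserialize(stream: Iterable[int], width: int) -> List[List[int]]:
    """Group `width` serial bits from one ATE pin into a parallel word that
    drives the decompressor inputs (one word per internal shift cycle)."""
    words: List[List[int]] = []
    current: List[int] = []
    for bit in stream:
        current.append(bit)
        if len(current) == width:
            words.append(current)
            current = []
    if current:
        raise ValueError("stream length is not a multiple of the word width")
    return words


def serialize(words: Iterable[List[int]]) -> List[int]:
    """Flatten parallel compressor outputs back into one serial bit stream."""
    return [bit for word in words for bit in word]


words = deserialize([1, 0, 1, 1, 0, 0], width=3)
print(words)              # [[1, 0, 1], [1, 0, 0]]
print(serialize(words))   # [1, 0, 1, 1, 0, 0]
```

With a width of 3, one ATE pin supplies a 3-bit decompressor input word every three pin clocks, so in this model the internal shift rate is one third of the pin data rate.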

Advantages:

- Selective scan channels

- Limited scan ports

- It can also operate as a normal compression method, selected by the test control signals.

ATPG:

ATPG stands for automatic test pattern generation. The procedure generates input patterns that determine whether faults are present or absent at specific locations in a circuit. Generating patterns to detect faults in a design is called test pattern generation; when it is automated by a DFT tool, it is called automatic test pattern generation.

The test patterns generated are of three types:

- Exhaustive test pattern generation

- Random test pattern generation

- Deterministic test pattern generation

Exhaustive test pattern generation:

Exhaustive test pattern generation applies all input combinations and checks the design for functional correctness. It is not used for ATPG pattern generation because of its complexity.

Disadvantages:

- Exhaustive testing is not necessary in ATPG.

- It is time-consuming.

- A design with n inputs requires 2^n patterns.

Random pattern generation

Random pattern generation uses an LFSR (linear feedback shift register) to generate pseudo-random patterns.

LFSR:

Random pattern generation involves three steps:

- Generate a random pattern.

- Determine how many faults are detected by the random pattern.

- Repeat the two steps above until random patterns no longer detect new faults.
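The three steps can be sketched in Python. The 3-bit LFSR uses the primitive polynomial x^3 + x^2 + 1, so it cycles through all seven non-zero states; the circuit (a 3-input AND gate), the fault list, and the `patience` stopping rule (stop after a few patterns in a row find nothing new) are illustrative assumptions.

```python
from typing import List, Optional, Set, Tuple

Fault = Tuple[str, int]                               # (net, stuck-at value)
Pattern = Tuple[int, int, int]
FAULTS: List[Fault] = [(n, v) for n in "abcz" for v in (0, 1)]


def lfsr3_next(state: int) -> int:
    """3-bit Fibonacci LFSR for x^3 + x^2 + 1: visits all 7 non-zero states."""
    bit = ((state >> 2) ^ (state >> 1)) & 1
    return ((state << 1) | bit) & 0b111


def and3(p: Pattern, fault: Optional[Fault] = None) -> int:
    """Toy circuit under test, z = a AND b AND c, with an optional stuck-at fault."""
    vals = dict(zip("abc", p))
    if fault and fault[0] in vals:
        vals[fault[0]] = fault[1]
    z = vals["a"] & vals["b"] & vals["c"]
    return fault[1] if fault and fault[0] == "z" else z


def random_atpg(seed: int = 0b001, patience: int = 3) -> Tuple[List[Pattern], Set[Fault]]:
    state, useless = seed, 0
    kept: List[Pattern] = []
    detected: Set[Fault] = set()
    while True:
        p = ((state >> 2) & 1, (state >> 1) & 1, state & 1)      # step 1: next pattern
        new = {f for f in FAULTS                                  # step 2: fault-simulate
               if f not in detected and and3(p, f) != and3(p)}
        if new:
            kept.append(p)
            detected |= new
            useless = 0
        else:
            useless += 1
        state = lfsr3_next(state)
        # step 3: stop when nothing new was found for `patience` patterns,
        # every fault is detected, or the LFSR sequence starts to repeat
        if useless >= patience or len(detected) == len(FAULTS) or state == seed:
            return kept, detected


kept, detected = random_atpg()
print(kept)            # [(0, 0, 1), (1, 0, 1), (0, 1, 1), (1, 1, 1), (1, 1, 0)]
print(len(detected))   # 8
```

Here five of the six generated patterns are kept and all eight stuck-at faults are detected. On real designs, random patterns tend to leave hard-to-detect faults behind, which is where deterministic ATPG is used.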

Deterministic ATPG

Deterministic test pattern generation involves sensitization, propagation, and justification of a particular fault in the design.

Deterministic ATPG methods are of two types:

- Path sensitization

- Symbolic difference

Symbolic difference:

- The symbolic difference method is not widely used.

- ATPG by symbolic difference is based on the well-known Shannon expansion.

- The path sensitization technique is generally preferred.
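The symbolic (Boolean) difference of F with respect to an input x is dF/dx = F(x=0) XOR F(x=1), which follows from the Shannon expansion F = x·F(x=1) + x'·F(x=0). A vector detects x stuck-at-v when dF/dx = 1 and x carries the opposite value. The Python sketch below evaluates this by brute force on a hypothetical circuit F = (a AND b) OR c; a real implementation would manipulate the expressions symbolically instead.

```python
from itertools import product
from typing import Callable, List, Tuple


def circuit(a: int, b: int, c: int) -> int:
    """Hypothetical example circuit: F = (a AND b) OR c."""
    return (a & b) | c


def boolean_difference_tests(func: Callable[..., int], index: int,
                             stuck_at: int, n: int) -> List[Tuple[int, ...]]:
    """Input vectors that detect input `index` stuck-at `stuck_at`:
    dF/dx = F(x=0) XOR F(x=1) must be 1 and x must carry the opposite value."""
    tests = []
    for vec in product((0, 1), repeat=n):
        with_0 = list(vec)
        with_0[index] = 0
        with_1 = list(vec)
        with_1[index] = 1
        sensitive = func(*with_0) ^ func(*with_1)      # Boolean difference at vec
        if sensitive and vec[index] == 1 - stuck_at:
            tests.append(vec)
    return tests


print(boolean_difference_tests(circuit, 0, 0, 3))   # [(1, 1, 0)]  a stuck-at-0
print(boolean_difference_tests(circuit, 0, 1, 3))   # [(0, 1, 0)]  a stuck-at-1
```

Here dF/da = c XOR (b OR c) = b AND (NOT c), so every test must set b = 1 and c = 0.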

Path sensitization

Path sensitization is based on the fault sensitization (activation), propagation, and justification approach. It is generally used to test faults that are difficult to test, and it is implemented by the D-algorithm.

Fault sensitization/activation:

- Driving the fault site to the value opposite to its stuck-at value activates the fault.

Propagation:

- A path is selected from the fault site to a primary output.

- The fault effect is propagated along this path so that it can be observed for detection.

Justification:

Justification works backward, from the outputs toward the inputs: it finds input values that produce the values needed for activation and propagation, so that the output shows whether the circuit is faulty or fault-free.
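A minimal path-sensitization sketch in Python follows, under strong simplifying assumptions: the path has no fanout, every side input is a primary input, and justification is therefore just assigning the side inputs. The gate table holds the standard non-controlling values (1 for AND/NAND, 0 for OR/NOR); the function name and path format are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Non-controlling input value for each gate type: a side input held at this
# value lets the fault effect pass through the gate.
NON_CONTROLLING = {"AND": 1, "NAND": 1, "OR": 0, "NOR": 0}


def sensitize(fault_net: str, stuck_at: int,
              path: List[Tuple[str, List[str]]]) -> Optional[Dict[str, int]]:
    """Return input values that activate the fault and propagate it along
    `path`, or None if the required side-input values conflict.
    Each path entry is (gate type, side inputs), ordered from the fault site
    to the output; side inputs are assumed to be primary inputs."""
    assign = {fault_net: 1 - stuck_at}            # activation: opposite value
    for gate, side_inputs in path:                # propagation, gate by gate
        need = NON_CONTROLLING[gate]
        for net in side_inputs:
            if assign.get(net, need) != need:     # justification conflict
                return None
            assign[net] = need                    # justification: set the input
    return assign


# z = (a AND b) OR c, target a stuck-at-0 along a -> AND -> OR -> z
print(sensitize("a", 0, [("AND", ["b"]), ("OR", ["c"])]))   # {'a': 1, 'b': 1, 'c': 0}
# The same side input would be needed at both 1 and 0: no test along this path
print(sensitize("a", 0, [("AND", ["b"]), ("OR", ["b"])]))   # None
```

The D-algorithm generalizes this to internal side inputs, fanout, and backtracking when a justification choice fails.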

Fault models:

A fault model describes, at the logic level, what can go wrong because of manufacturing or design defects. A successful fault model should satisfy two criteria: it should reflect the behavior of the defect, and it should be computationally efficient for fault simulation and test pattern generation.

There are several fault models:

- Stuck-at fault model

- Bridging fault model

- Delay fault model

- IDDQ fault model

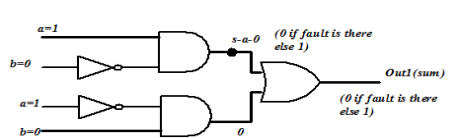

Stuck at fault model:

The stuck-at fault model affects the state of logic signals on a line in a logic circuit, including primary inputs and primary outputs.

The faulty line appears to be stuck at a constant logic value. There are two stuck-at faults:

Stuck-at-0 fault

The net is held at a constant logic 0.

Stuck-at-1 fault

The net is held at a constant logic 1.

Bridging fault model:

A short between two signal lines is commonly called a bridging fault.

Two types of bridging fault models exist:

Wired-AND bridging

The net formed by the two shorted lines behaves as the AND of the two drivers: it is logic “0” whenever either line is driven to 0.

Wired-OR bridging

The net formed by the two shorted lines behaves as the OR of the two drivers: it is logic “1” whenever either line is driven to 1.
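The two bridging models can be compared with a short Python sketch. It shows that either bridge changes a value only when the two shorted nets are driven to opposite values, so a test must first create that difference and then propagate the wrong value to an observation point. The function names are illustrative.

```python
from itertools import product
from typing import List, Tuple


def bridge(x: int, y: int, kind: str) -> Tuple[int, int]:
    """Values seen on the two shorted nets when their drivers want x and y."""
    v = x & y if kind == "wired-AND" else x | y
    return v, v


def activating_vectors(kind: str) -> List[Tuple[int, int]]:
    """Driver values for which the bridge changes at least one net's value."""
    return [(x, y) for x, y in product((0, 1), repeat=2)
            if bridge(x, y, kind) != (x, y)]


print(activating_vectors("wired-AND"))   # [(0, 1), (1, 0)]
print(activating_vectors("wired-OR"))    # [(0, 1), (1, 0)]
```

The models differ in which net is corrupted: with (0, 1), wired-AND pulls the second net down to 0, while wired-OR pulls the first net up to 1.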

Delay fault model:

A delay fault causes excessive delay along a path, so that the total propagation delay falls outside the specified limit.

Delay fault models are of two types:

- Transition delay fault

A transition delay fault occurs when the time taken for a transition to propagate from a gate input to its output exceeds the specified range.

- Path delay fault

A path delay fault considers the cumulative propagation delay along a signal path through the circuit under test.

IDDQ fault model:

A “stuck short” fault model is also known as the IDDQ fault model. The fault creates a conducting path between Vdd and Vss, which increases power consumption in the design.

Monitoring the steady-state (quiescent) power supply current to detect transistor stuck-short faults is called IDDQ testing.

Fault classes:

Faults are classified into fault classes based on how they were detected or why they could not be detected.

Fault classes are of two types:

- Untestable

- Testable

Testable (TE)

Testable faults are all faults that cannot be proven untestable. The testable faults are classified as follows:

Detected (DT)

Includes all faults that the ATPG process identifies as detected:

- Det-simulation

- Det-implication

Posdet (PD)

Includes all faults that fault simulation identifies as possibly detected but not hard detected:

- Posdet-testable(PT)

- Posdet-untestable(PU)

ATPG-untestable (AU)

- The ATPG-untestable fault class includes all faults for which the test generator cannot find a pattern that creates a test.

- These faults may be testable, but ATPG limitations make them untestable.

Undetected (UD)

The undetected fault class includes faults that are not detected and cannot be proven untestable or ATPG-untestable:

- Un-controllable (UC)

- Un-observable (UO)

Untestable (UT):

Untestable faults are faults for which no pattern can exist that detects or possibly detects them. Because they cannot cause functional failures, the tool can exclude them. The untestable faults are classified as:

- Unused fault (UU)

Includes all faults on circuitry that is not connected to any circuit observation point, and faults on floating primary outputs.

- Tied fault (TI)

Includes all faults on circuitry where the fault site is tied to a particular value.

- Blocked faults (BL)

Includes all faults on circuitry for which tied logic blocks all paths to an observable point.

- Redundant faults (RE)

Includes all faults that the test generator considers untestable under any condition.

SAF(Stuck at faults):

SAF stands for stuck-at fault. The stuck-at fault model affects the state of logic signals on a line in a logic circuit, including primary inputs and primary outputs.

The faulty line appears to be stuck at a constant logic value. Stuck-at fault model types:

Stuck-at-0 fault

The net is held at a constant logic 0.

Stuck-at-1 fault

The net is held at a constant logic 1.

TDF(transition delay fault)

A transition delay fault (TDF) occurs when a transition on a particular node does not produce the expected result at the maximum operating speed.

TDFs are classified into two types:

- Slow to rise

- Slow to fall

Slow to rise:

When the circuit runs at its maximum operating frequency and a particular node cannot complete the transition from 0 to 1 in time, the fault is called “slow to rise”.

Slow to fall:

When the circuit runs at its maximum operating frequency and a particular node cannot complete the transition from 1 to 0 in time, the fault is called “slow to fall”.

Transition delay test approach:

In the TDF test approach, we generate an initialization vector and a launch vector. Based on how the launch vector is generated, the test is categorized into two types:

Launch on capture (LOC)

- The launch transition is created through the functional capture path.

Disadvantages:

- Coverage in LOC can be lower, because some nodes cannot be fully controlled in some cases.

Launch on shift (LOS)

The launch transition is created by the last shift, through the scan shift path.

Launch on extra shift (LOES)

It uses a pipelined architecture as a means of avoiding the limitations of LOS.

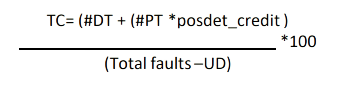
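The difference between the two launch methods is where the second vector comes from. The Python sketch below assumes a single four-flop scan chain and a hypothetical combinational block (`swap_pairs`); it builds the launch vector both ways and lists which flop outputs get a rising or falling transition.

```python
from typing import Callable, List

Bits = List[int]


def los_launch(v1: Bits, scan_in: int) -> Bits:
    """Launch on shift: the launch vector is V1 shifted by one more scan clock."""
    return [scan_in] + v1[:-1]


def loc_launch(v1: Bits, logic: Callable[[Bits], Bits]) -> Bits:
    """Launch on capture: the launch vector is the functional response to V1."""
    return logic(v1)


def transitions(v1: Bits, v2: Bits) -> List[str]:
    """Per scan flop: the transition launched between V1 and V2."""
    kinds = {(0, 1): "rise", (1, 0): "fall"}
    return [kinds.get((a, b), "-") for a, b in zip(v1, v2)]


def swap_pairs(s: Bits) -> Bits:
    """Hypothetical combinational logic feeding the scan flops."""
    return [s[1], s[0], s[3], s[2]]


v1 = [0, 1, 1, 0]                                   # initialization vector
print(transitions(v1, los_launch(v1, scan_in=1)))   # ['rise', 'fall', '-', 'rise']
print(transitions(v1, loc_launch(v1, swap_pairs)))  # ['rise', 'fall', 'fall', 'rise']
```

In LOS the launch vector can only be a one-bit shift of the initialization vector, while in LOC it is whatever the functional logic produces, so each method can launch transitions that the other cannot.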

Test coverage & fault coverage

- Test coverage

Test coverage, which is a measure of test quality, is the percentage of faults detected among all testable faults.

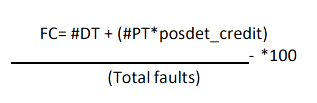

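- Fault coverage

Fault coverage is the percentage of faults detected among all faults in the fault list, including untestable faults.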
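The two coverage measures differ only in their denominator. The Python sketch below computes both from fault class counts. Some tools give possibly-detected (PD) faults partial credit; the 50% credit used here is an assumption, and the counts are made-up example numbers.

```python
from typing import Tuple


def coverage(total: int, dt: int, pd: int = 0, ut: int = 0,
             pd_credit: float = 0.5) -> Tuple[float, float]:
    """Return (test coverage %, fault coverage %) from fault class counts.
    Assumption: each possibly-detected (PD) fault earns pd_credit of a detection."""
    detected = dt + pd_credit * pd
    test_cov = 100.0 * detected / (total - ut)   # untestable faults excluded
    fault_cov = 100.0 * detected / total         # all faults counted
    return test_cov, fault_cov


# Hypothetical counts: 1,000 faults, 50 untestable, 900 detected, 20 possibly detected
tc, fc = coverage(total=1000, dt=900, pd=20, ut=50)
print(round(tc, 2), round(fc, 2))   # 95.79 91.0
```

Test coverage is always at least as high as fault coverage, because it removes untestable faults from the denominator.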

Inputs & output of ATPG:

ATPG DRC rules:

To generate ATPG patterns for a design, the design must comply with a set of ATPG design rules, because rule violations limit fault coverage. These violations must be avoided.

ATPG DRCs:

- Clock rule checking

- Data rule checking

- Scan rule checking

- Compression rule tracing

- Path delay rules

ATPG commands:

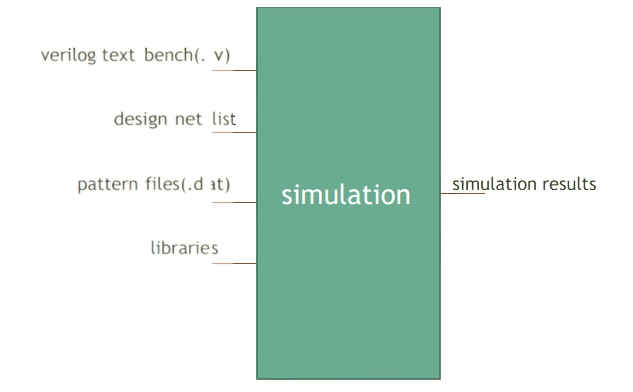

Simulation:

Simulation makes it possible to build complex waveforms in a very short time. In DFT simulation, we run the patterns on the design and validate its behavior from the waveforms, comparing the expected values in the testbench patterns with the simulated values. When they match, the simulation passes; otherwise a mismatch is reported.

Circuit simulation:

Circuit simulation is the imitative representation of a circuit’s functioning by an alternative means, such as a computer program.

Fault simulation:

Simulating a circuit in the presence of faults is called fault simulation. It can be performed in two ways:

- Serial fault simulation

- Parallel fault simulation
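Parallel fault simulation packs the good machine and many faulty machines into the bits of one machine word, so one pass of bitwise logic simulates all of them. The Python sketch below does this for a hypothetical circuit z = (a AND b) OR c with all eight stuck-at faults (Python integers act as arbitrarily wide words), and checks the result against serial fault simulation, which re-simulates the circuit once per fault.

```python
from itertools import product
from typing import List, Tuple

Fault = Tuple[str, int]
# Hypothetical circuit z = (a AND b) OR c with both stuck-at faults on every net.
FAULTS: List[Fault] = [(n, v) for n in "abcz" for v in (0, 1)]
WIDTH = len(FAULTS) + 1            # bit 0 = good machine, bit j+1 = FAULTS[j]
ALL = (1 << WIDTH) - 1


def force(word: int, net: str) -> int:
    """Overwrite the bits of the faulty machines whose fault sits on `net`."""
    for j, (n, s) in enumerate(FAULTS):
        if n == net:
            bit = 1 << (j + 1)
            word = (word | bit) if s else (word & ~bit)
    return word


def parallel_fault_sim(pattern: Tuple[int, int, int]) -> List[Fault]:
    """Simulate the good machine and every faulty machine in one pass of bitwise ops."""
    a, b, c = (force(ALL if v else 0, n) for n, v in zip("abc", pattern))
    z = force((a & b) | c, "z")
    good = ALL if z & 1 else 0                # replicate the good value into every bit
    diff = z ^ good
    return [f for j, f in enumerate(FAULTS) if (diff >> (j + 1)) & 1]


def serial_fault_sim(pattern: Tuple[int, int, int]) -> List[Fault]:
    """Reference: re-simulate the circuit once per fault."""
    def z(fault=None) -> int:
        vals = dict(zip("abc", pattern))
        if fault and fault[0] in vals:
            vals[fault[0]] = fault[1]
        out = (vals["a"] & vals["b"]) | vals["c"]
        return fault[1] if fault and fault[0] == "z" else out
    return [f for f in FAULTS if z(f) != z()]


for p in product((0, 1), repeat=3):
    assert parallel_fault_sim(p) == serial_fault_sim(p)
print(parallel_fault_sim((1, 1, 0)))   # [('a', 0), ('b', 0), ('z', 0)]
```

The serial version evaluates the circuit once per fault for each pattern, while the parallel version evaluates it once per pattern, which is where the speedup comes from.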

Simulation types:

Simulations are of two types:

- Serial simulation

- Parallel simulation

Before performing simulation, first check the design setup with a chain test.

Chain test:

The chain test checks that all scan chains work properly, verifying chain connectivity and net connectivity, and confirms that all chain test patterns pass.

Serial simulations:

In serial simulation, each test pattern is shifted serially through the scan chains, one pattern at a time, and the output response of the circuit is analyzed.

Parallel simulations:

In parallel simulation, the patterns are applied to the scan cells in parallel and the output response is taken. The response is obtained after only one clock cycle.

Simulation is based on two approaches:

- Zero delay simulation/no timing simulation

- With timing simulation

Zero delay simulation:

Zero-delay simulation is performed without considering timing. It checks circuit connectivity using the netlist and the data patterns.

With timing simulations:

Timing simulation considers the timing on each path while simulating the patterns and capturing the response.

Timing simulation is done in two ways:

SDF min

It considers the minimum timing case, i.e., the best-case (fastest) timing.

SDF max

It considers the maximum timing case, i.e., the worst-case (slowest) timing.

Why do we do simulation once we have done ATPG?

ATPG and simulation libraries are different. ATPG libraries describe only the functionality of each cell, while simulation libraries also contain timing checks. These timing checks must be run for every design before the patterns are passed to the ATE for testing, because the simulation libraries model real-life behavior. ATPG libraries cannot be used for timing checks, and the ATPG tool cannot read libraries that contain timing checks. Because of this library mismatch, we run both ATPG and simulation to ensure that the design works in real time.

Simulation mismatches:

Test patterns are verified with device timing information to ensure they can be applied to real circuits. If there is any mismatch between the timing-based simulation results and the expected results, the patterns must be corrected. If the patterns are not corrected, good devices will be rejected during manufacturing test.

The most common simulation mismatches:

- Timing issues

- Library mismatches.

- Design rule violations

- Clock rule violation

- Bidirectional inputs/outputs

Simulation commands

JTAG

JTAG stands for Joint Test Action Group.

It is the IEEE 1149.1 standard method for testing PCB interconnections through logic implemented in the integrated circuits.

Only one data register is connected between TDI and TDO at a time.

JTAG builds test points into chips and tests the interconnections between them.

The main components of JTAG are as follows:

- TAP controller

- Data register

- Instruction register

Architecture of JTAG:

Registers:

- Instruction register

- Bypass register

- Boundary-scan register

- Identification register (optional)

- User-defined registers

Boundary scan cells:

Boundary scan is a method for testing PCB interconnections. It is also used for debugging, to monitor the states of integrated circuit pins. The INTEST and EXTEST instructions are used to test the chip’s internal logic and its external interconnections, respectively.

Operating modes:

- Normal mode

- Scan mode

- Capture mode

- Update mode

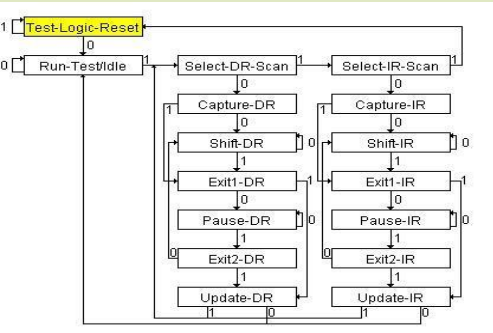

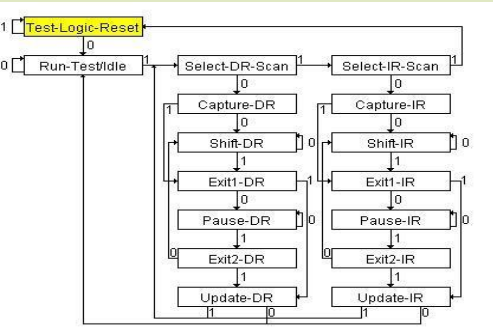

TAP controller

The TAP controller is a 16-state finite state machine.

All state transitions of the TAP controller are controlled by the TMS signal, sampled on the rising edge of TCLK (TCK). The TAP controller connections are:

- TCLK

- TMS

- TDI

- TDO
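The 16 states and their TMS-driven transitions, as defined by IEEE 1149.1, can be written as a lookup table. The Python sketch below uses the standard’s state names; the `walk` helper is hypothetical and advances one state per TCK rising edge.

```python
# IEEE 1149.1 TAP controller: (next state if TMS = 0, next state if TMS = 1).
TAP_NEXT = {
    "Test-Logic-Reset": ("Run-Test/Idle", "Test-Logic-Reset"),
    "Run-Test/Idle":    ("Run-Test/Idle", "Select-DR-Scan"),
    "Select-DR-Scan":   ("Capture-DR", "Select-IR-Scan"),
    "Capture-DR":       ("Shift-DR", "Exit1-DR"),
    "Shift-DR":         ("Shift-DR", "Exit1-DR"),
    "Exit1-DR":         ("Pause-DR", "Update-DR"),
    "Pause-DR":         ("Pause-DR", "Exit2-DR"),
    "Exit2-DR":         ("Shift-DR", "Update-DR"),
    "Update-DR":        ("Run-Test/Idle", "Select-DR-Scan"),
    "Select-IR-Scan":   ("Capture-IR", "Test-Logic-Reset"),
    "Capture-IR":       ("Shift-IR", "Exit1-IR"),
    "Shift-IR":         ("Shift-IR", "Exit1-IR"),
    "Exit1-IR":         ("Pause-IR", "Update-IR"),
    "Pause-IR":         ("Pause-IR", "Exit2-IR"),
    "Exit2-IR":         ("Shift-IR", "Update-IR"),
    "Update-IR":        ("Run-Test/Idle", "Select-DR-Scan"),
}


def walk(state: str, tms_bits) -> str:
    """Apply one TMS value per rising edge of TCK and return the final state."""
    for tms in tms_bits:
        state = TAP_NEXT[state][tms]
    return state


print(walk("Test-Logic-Reset", [0, 1, 0, 0]))   # Shift-DR
print(walk("Run-Test/Idle", [1, 1, 0, 0]))      # Shift-IR
print(walk("Pause-IR", [1] * 5))                # Test-Logic-Reset
```

Holding TMS at 1 for five clocks returns the controller to Test-Logic-Reset from any state, which is how a tester synchronizes with the TAP without a dedicated reset pin.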

Instruction register:

The instruction register allows instructions to be shifted into the design. The instruction register selects the test to be performed and instructs which data register is accessed.

Test instructions are classified into two types:

- Mandatory instructions

- Optional instructions

Mandatory instructions:

Mandatory instructions must be supported by every JTAG (IEEE 1149.1) compliant device. These instructions play the main role in interconnect checking:

- EXTEST

- Bypass

- Sample

- Preload

Optional instructions:

Optional instructions are mainly used for special test purposes.

They are not required, but they can be implemented when special tests are needed.

The optional instructions

- Intest

- Runbist

- Highz

- Usercode

- Clamp

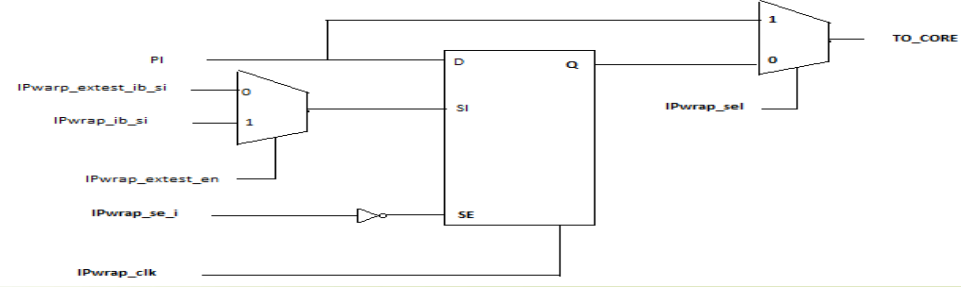

Wrapper cell:

Wrapper cells are defined by the IEEE 1500 (P1500) standard method for testing block-level interconnections.

Wrappers are placed around blocks to provide control and observation at the block level. There are two types of wrapper chains:

- Input wrapper chain

- Output wrapper chain

Input wrapper chain:

Output wrapper chain:

Operating modes:

- Inbound test

It is an internal core test.

- Outbound test

It is a top-level test of the logic and interconnections outside the core.

Types of wrapper cells:

Wrapper chains consist of two types of wrapper cells:

- Dedicated wrapper cell

- Shared wrapper cell

Dedicated wrapper cell

A new cell is added to the design for test purposes; it controls and observes the block’s inputs and outputs.

Disadvantages:

- Area overhead

- Additional delay is added

Shared wrapper cell

To isolate input and output for testing purposes, an existing functional cell is reused.

Disadvantages:

- The tool requires additional analysis to identify which flops can be shared, and this identification can become complex depending on the fan-out.

Important definitions:

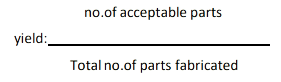

Yield : The yield of a manufacturing process is defined as the percentage of acceptable parts among all parts fabricated.

DPPM: DPPM stands for defective parts per million, the number of defective parts per million parts.

Rejection ratio:

A few defective parts escape testing. The rejection ratio is the ratio of these escaped defective parts to the total number of tested parts.
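The definitions above reduce to simple ratios. The Python sketch below uses hypothetical numbers: 10,000 parts fabricated, 8,997 of them actually good, and 9,000 passing the test, so 3 defective parts escape. DPPM is applied here to the shipped (passing) parts.

```python
def yield_percent(good_parts: int, fabricated: int) -> float:
    """Percentage of acceptable parts among all parts fabricated."""
    return 100.0 * good_parts / fabricated


def dppm(defective_parts: int, total_parts: int) -> float:
    """Defective parts per million."""
    return 1e6 * defective_parts / total_parts


def rejection_ratio(escaped_parts: int, tested_parts: int) -> float:
    """Ratio of defective parts that escape testing to all tested parts."""
    return escaped_parts / tested_parts


fabricated, truly_good, passed = 10_000, 8_997, 9_000   # hypothetical numbers
escaped = passed - truly_good                            # 3 defective parts pass the test
print(yield_percent(truly_good, fabricated))   # about 89.97
print(dppm(escaped, passed))                   # about 333.3 per million shipped parts
print(rejection_ratio(escaped, fabricated))    # 0.0003
```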

ICG:

ICG stands for integrated clock gating. It is mainly used to save power: it disables the clock to a particular portion of the design when that portion is not in use.

Advantages:

- Saves power

Disadvantages:

- Additional area overhead

- Extra design effort is required

- The gating circuitry must be designed carefully
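The usual latch-based ICG structure captures the enable in a latch that is transparent while the clock is low, then ANDs the latched enable with the clock, so enable changes during the high phase cannot create spurious clock pulses. The Python sketch below samples signals once per half clock period. The test-enable (TE) input ORed with EN is an assumption modeled on the common ICG cell style that keeps clocks running during scan shift.

```python
from typing import List


def icg(clk: List[int], en: List[int], te: List[int]) -> List[int]:
    """Latch-based integrated clock gate, sampled once per half clock period.
    The latch is transparent while clk is low, so EN/TE changes during the
    high phase cannot reach the gated clock until the next low phase."""
    q, gclk = 0, []
    for c, e, t in zip(clk, en, te):
        if c == 0:
            q = e | t          # latch follows (EN OR TE) while the clock is low
        gclk.append(c & q)     # AND gate: gated clock = clk AND latched enable
    return gclk


clk = [0, 1, 0, 1, 0, 1, 0, 1]
en = [1, 1, 0, 1, 0, 0, 1, 1]          # EN glitches high during the second high phase
print(icg(clk, en, [0] * 8))           # [0, 1, 0, 0, 0, 0, 0, 1]
print([c & e for c, e in zip(clk, en)])  # plain AND gate: [0, 1, 0, 1, 0, 0, 0, 1]
print(icg(clk, en, [1] * 8))           # TE = 1: clock always passes
```

The plain AND gate lets the enable glitch through as an extra clock pulse, while the latch-based gate blocks it.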

DESIGN FOR TESTABILITY

What is DFT?

DFT means design for testability. During the design process, DFT uses test features to detect manufacturing defects during production. This method allows controllability and observation of the chip’s internal nodes during production. It facilitates the design to be faulty or fault free. Reduces test costs and improve test efficiency

Why DFT?

In addition to reducing the debug time and increasing the test quality of chips, DFT provides a structured way of detesting manufacturing defects.

What is functional testing?

In functional testing, all possible test patterns are generated for testing a design’s functionality.

Disadvantages:

- Functional testing is a time-taking process

Example if a design has n inputs it requires the 2^n patterns in functional testing

- Increase test time and cost

- Increase test memory

Note :- By using design for testability (DFT), functional testing can overcome its disadvantages

DFT testing strategies

As chip sizes decrease, manufacturing processes may play a significant role in chip defects. There is a need to test chips for manufacturing defects cost effectively.

DFT testing strategies are of two types

- Ad-hoc testing

- Structural testing

Ad-hoc testing:

An adhoc test is a manual test that replaces bad design practices with good ones.

It doesn’t have proper documentation. It doesn’t have major design changes

Some major changes in adhoc testing:

- Reduce the combination of logic

- Reduce redundant logic

- Avoid synchronous and asynchronous resets

- Partitioning the large design into small design blocks

Disadvantages:

- Adhoc testing is a time-taking process

- Skilled DFT engineers are required.

- There is no guarantee that high fault coverage is achieved when tests are generated manually

Note: To overcome the disadvantages of adhoc testing, structural testing is required.

Structural testing:

The purpose of structural testing is to select test patterns based on the structure of the circuit and a set of fault models. It verifies the correctness of the circuit structure, but not the functionality of the whole design.

Advantages:

- Improve design coverage

- Improve test efficiency

- Decrease test time

- Decrease test cost

Structural testing types:

- SCAN

- SCAN compression

- JTAG/Boundary SCAN

- BIST

- MBIST

- LBIST

Basics:

Defect:

The defect is a physical imperfection or flaw that occurs during the design process.

Fault:

A logical representation and modeling of that defect is called a fault.

Failure:

Failure is a deviation of circuit performance

Error:

An error is a wrong output signal produced by a defective circuit

Controllability:

Ability to place or shift known values on the net is called controllability

Observability:

Ability to observe the net or node of a gate after driving to a known logic value is called observability.

Fault equivalence:

One test pattern that detects multiple faults is called fault equivalence. The output function represented with f1 is same as output function represented by circuit with f2. The f1&f2 is called fault equivalence

Fault collation:

A fault collection is the process of detecting two types of stuck at faults in the same design

Fault dominance:

All tests are stuck at fault f1 and detect fault f2, then f2 dominates f1. If f2 dominates f1, f2 can be removed and only f1 is retained. Then is called fault dominant.

DFT flow :

- DFT flow has four stages

- SCAN

- SCAN compression

- ATPG

- Simulation

SCAN

Need of SCAN:

- In a small and purely combinational circuit it is easy to control the internal nodes from PI’s and observe the response at PO’s.

- Today’s designs tend to be complex and contain sequential cells.

- The internal nodes will be fed by flip-flops which are logically far from the primary inputs and outputs. This makes internal nodes difficult to test.

Scan helps us apply stimulus to internal nodes by leading the flip-flops feeding them and propagating the captured value in scan cells through shift register mode.

SCAN Principle

First convert the normal flop into a scan flop using a multiplexer.

Serial scan shift registers are used to apply the test patterns directly to the internal design. Apply the capture clock and capture the internal logic’s response into the scan cells. The captured values are shifted out through the scan out.

SCAN Operation:

![]()

Scan inputs & outputs

SCAN:

Advantages:

- Easy to get controllability and observation on each and every node

- Make a complete design for the shift register

- Improve test efficiency

- Easy to generate scan design patterns

- Increase coverage

Disadvantages:

- Area over ahead

- Power consumption increase

- Timing issues are coming

- Routing congestion increases.

SCAN Cells:

Based on the signal which provides scan shift capability there are three types of scan styles

- Muxed scan cell

- Clocked scan cell

- LSSD scan cell

Multiplexer scan cells:

This scan cell is composed of a D flip-flop and a multiplexer. The multiplexer uses a scan enable (SE) input to select between the data input (DI) and the scan input (SI). In normal (capture) mode, SE is set to 0 and the flop captures DI. In shift mode, SE is set to 1 and the flop captures SI.

Advantages:

- It requires only one clock

- Simple design

Disadvantages:

- The area of the design increases.

- Adds a multiplexer delay to the functional path

Clocked scan cells:

It is similar to a multiplexed scan cell, but it uses two clocks, one for shifting and one for capturing. A dedicated edge-triggered test clock provides the scan shift capability. The two clocks are:

- Data clock/functional clock

- Scan clock/shift clock/test clock

Advantages:

- Dedicated clocks are used for capturing and shifting

- No multiplexer in the functional data path, so there is no performance degradation

Disadvantages:

- It requires an additional shift clock

- Requires additional routing

LSSD SCAN Cell:

LSSD (level-sensitive scan design) scan replaces edge-triggered flip-flops with LSSD-compatible flip-flop cells that use dedicated LSSD master-slave test clocks for scan shift.

Advantages:

- Allows scan insertion into latch-based designs

- Guarantees race-free operation

- Design is free of timing errors

Disadvantages:

- Requires additional routing for the additional clocks, which increases routing complexity

- Clock signals are difficult to control

SCAN Types:

Based on testing requirements, scan designs are divided into three types:

- Full scan

- Partial scan

- Partition scan

Full scan design:

In the full scan design all storage elements are converted into scan cells.

Advantages:

- Highly automated process

- High fault coverage

- Highly effective

Disadvantages:

- Area overhead

- May introduce timing issues


Partial scans:

The partial-scan design only requires that a subset of storage elements be replaced with scan cells and connected into scan chains.

Advantages:

- Reduces the area impact

- Reduces the timing impact

Disadvantages:

- Lower test coverage

Partition scan design:

In a partition scan design, the design is divided into partitions, and each partition is converted into its own scan chains.

Advantages:

- Improves test coverage and run time

- Lower power consumption

Disadvantages:

- The interconnections between blocks need to be checked.

Note: Wrapper cells may be required to check the interconnections between blocks.

SCAN design rules:

Scan design rules are also called scan DRCs.

To implement scan in a design, the design must comply with the scan design rules, because violations limit fault coverage. Scan design rules labeled “avoid” must be fixed for both shift and capture operations.

Major scan DRCs:

- All internal clocks must be controllable from the top level

- Set and reset signals must be controllable from the top level

- Avoid combinational feedback loops in the design

- Any latch in the design must be made transparent in test mode

- Existing shift registers need not be converted into scan flops

- Bypass memory logic if memory elements are in the design

- Avoid multicycle paths as much as possible

- Minimize coupling between clock and data

Scan DRCs:

Scan-enable signals must be buffered.

Edge mixing

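When a positive-edge flop is followed by a negative-edge flop in a scan chain, shift data mismatches occur: with a clock that rises and then falls within each cycle, the negative-edge flop captures the value the positive-edge flop has just loaded, so one bit is lost. These mismatches are avoided by two methods:

- Order the chain so that negative-edge flops come before positive-edge flops

- Use a lockup latch between a positive-edge flop and the negative-edge flop that follows it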
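A small behavioral sketch shows why a positive-edge flop followed by a negative-edge flop corrupts shifting, while placing the negative-edge flop first behaves like a normal two-stage chain. It assumes an ideal zero-delay clock that rises and then falls in every cycle, with no skew; the lockup latch fix is not modelled, and `shift_chain` is a hypothetical helper.

```python
def shift_chain(edges, bits):
    """Shift `bits` into a scan chain whose cells trigger on the given edges.

    edges lists each cell's active edge ("pos" or "neg"), scan-in side first.
    Each clock cycle is modelled as a rising edge followed by a falling edge.
    Returns the scan-out value at the end of every cycle.
    """
    q = [0] * len(edges)
    out = []
    for bit in bits:
        for edge in ("pos", "neg"):      # rising edge first, then falling edge
            prev = [bit] + q[:-1]        # SI of each cell: scan-in or previous Q
            q = [prev[i] if edges[i] == edge else q[i] for i in range(len(q))]
        out.append(q[-1])                # scan-out at the end of the cycle
    return out

print(shift_chain(["pos", "pos"], [1, 0, 0, 0]))  # [0, 1, 0, 0]: two-cycle latency
print(shift_chain(["neg", "pos"], [1, 0, 0, 0]))  # [0, 1, 0, 0]: still correct
print(shift_chain(["pos", "neg"], [1, 0, 0, 0]))  # [1, 0, 0, 0]: one stage lost
```

In the positive-then-negative order both cells load the same bit in one cycle, so the two-cell chain behaves as if it had only one cell.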

Scan balancing:

The number of shift cycles per pattern, and hence the scan vector length, depends on the longest scan chain in the design. Shorter chains must be padded with X (filler) values, which wastes tester memory. Scan balancing overcomes both problems: all scan chains are made equal, or nearly equal, in length.
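The arithmetic behind scan balancing can be sketched in a few lines. The chain lengths are hypothetical, and `balance` simply splits the flops so chain lengths differ by at most one, which is one straightforward way to balance, not necessarily what a given tool does.

```python
def shift_cycles(chain_lengths):
    """Shift cycles per pattern are set by the longest chain."""
    return max(chain_lengths)

def padding_bits(chain_lengths):
    """Filler (X) bits loaded into shorter chains to match the longest one."""
    longest = max(chain_lengths)
    return sum(longest - n for n in chain_lengths)

def balance(num_flops, num_chains):
    """Split flops into chains whose lengths differ by at most one."""
    base, extra = divmod(num_flops, num_chains)
    return [base + 1 if i < extra else base for i in range(num_chains)]

unbalanced = [400, 100, 50, 50]   # hypothetical: 600 flops in 4 chains
balanced = balance(sum(unbalanced), len(unbalanced))
print(shift_cycles(unbalanced), padding_bits(unbalanced))            # 400 1000
print(balanced, shift_cycles(balanced), padding_bits(balanced))      # [150, 150, 150, 150] 150 0
```

Balancing the same 600 flops cuts the shift cycles per pattern from 400 to 150 and removes all padding.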


Scan compression:

Compression is a technique to reduce test time and cost and test memory of the design.

Compression breaks the existing long scan chains between the scan-ins and scan-outs into many shorter internal scan chains, that is, it increases the number of scan chains without increasing the number of scan channels.

The length of scan chains decreases without increasing the scan ports.

Advantages:

- Reduce test cost

- Reduce test time

- May reduce test memory/data

Disadvantages:

- Area overhead

Scan compression architecture:

Scan compression main blocks:

- Decompressor

- Compressor

- Masking logic

Compression block diagram:

Decompressor:

The decompressor can be a broadcast or spreader network built from multiplexer logic on the scan input pins. It broadcasts the input patterns from the scan channels to the internal scan chains, with the multiplexer logic connected in an irregular (random-like) way. For each shift cycle, ATPG chooses the load mode and the scan data values that steer the required values into the compressed scan chains.
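The idea of choosing a load mode and channel values for each shift cycle can be sketched with a toy broadcast network. The two-entry `MODES` table, the channel count and the helper names are hypothetical; real decompressors are larger and their mappings are tool-generated.

```python
N_CHANNELS = 2
# Hypothetical multiplexer configuration: MODES[mode][chain] = channel feeding that chain.
MODES = [
    [0, 1, 0, 1],  # load mode 0
    [0, 0, 1, 1],  # load mode 1
]

def decompress(channel_bits, mode):
    """Broadcast one shift cycle of channel data to the internal chains."""
    return [channel_bits[ch] for ch in MODES[mode]]

def choose_mode(required):
    """Pick a load mode and channel values that give the required chain values.

    required[c] is 0, 1 or None (don't care). Returns (mode, channel_bits),
    or None when no mode can satisfy the request in this shift cycle.
    """
    for mode, table in enumerate(MODES):
        channels = {}
        conflict = False
        for chain, value in enumerate(required):
            if value is None:
                continue
            if channels.setdefault(table[chain], value) != value:
                conflict = True
                break
        if not conflict:
            # Channels that no care bit depends on are filled with 0.
            return mode, [channels.get(ch, 0) for ch in range(N_CHANNELS)]
    return None

print(choose_mode([1, 1, 0, 0]))   # (1, [1, 0])
print(choose_mode([1, 0, 0, 1]))   # None: no mode can produce this combination
```

The second request fails because two chains fed by the same channel need opposite values in every mode; compression works because most scan bits in a pattern are don't-cares.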

Compressor:

The compressor uses XOR logic to compress the data from many internal scan chains into a small number of scan channels.
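A minimal sketch of an XOR compressor for one shift cycle follows. The grouping of six internal chains onto two scan channels is hypothetical.

```python
from functools import reduce
from operator import xor

# Hypothetical grouping: which internal chains feed each scan-out channel.
GROUPS = [[0, 1, 2], [3, 4, 5]]

def compress(chain_outputs):
    """XOR the chain outputs of each group into one scan channel bit."""
    return [reduce(xor, (chain_outputs[c] for c in group)) for group in GROUPS]

print(compress([1, 0, 0, 1, 1, 0]))   # [1, 0]
print(compress([1, 1, 0, 1, 1, 0]))   # [0, 0]: an error on chain 1 flips channel 0
```

Because XOR is used, a single erroneous bit on any chain always flips its channel output, so the fault effect remains visible.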

Masking logic:

If the design contains X-source logic, it can drive X (unknown) values into the scan chain outputs.

These X values reduce test coverage, so the X-source logic must be masked with masking logic.

Masking logic handles two effects:

- X-masking

- Aliasing effect


X-masking:

- If any internal scan chain drives an X value into the XOR logic, the XOR output on that scan channel also becomes X, hiding the values of the other chains feeding it.

- X-masking gates that particular scan chain with AND logic, forcing its output to a known 0 before it reaches the XOR.
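X-masking can be sketched with three-valued logic, representing X as `None`. The chain values and mask-enable bits are hypothetical; the point is that an AND gate with a 0 enable produces a known 0 even when its other input is X.

```python
X = None  # unknown value

def xor3(a, b):
    """XOR in three-valued logic: any X input gives X."""
    return X if a is X or b is X else a ^ b

def and3(a, b):
    """AND in three-valued logic: a 0 on either input forces 0, even against X."""
    if a == 0 or b == 0:
        return 0
    return X if a is X or b is X else 1

def channel_out(chain_outputs, mask_enable):
    """Gate each chain with AND(mask_enable) before the XOR compressor."""
    result = 0
    for value, enable in zip(chain_outputs, mask_enable):
        result = xor3(result, and3(value, enable))
    return result

print(channel_out([1, X, 0], [1, 1, 1]))   # None: the X corrupts the channel
print(channel_out([1, X, 0], [1, 0, 1]))   # 1: chain 1 masked, others observed
```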

Aliasing effect:

- Aliasing occurs when the compressed scan output has the same value with and without the fault, for example when the fault effects on two chains feeding the same XOR cancel each other.

- This effect can be avoided by masking one of those chains with the AND masking logic.
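The aliasing effect and its fix can be sketched for one shift cycle of a four-chain XOR compressor. The good and faulty chain values are hypothetical: the fault flips chains 0 and 1, so the two errors cancel in the XOR.

```python
from functools import reduce
from operator import xor

def compress(chain_bits):
    """XOR all chain outputs into one scan channel bit."""
    return reduce(xor, chain_bits)

def masked(chain_bits, enable):
    """AND each chain with its mask-enable bit (0 = masked)."""
    return [b & e for b, e in zip(chain_bits, enable)]

good = [1, 0, 1, 1]     # hypothetical fault-free chain outputs
faulty = [0, 1, 1, 1]   # the fault flips chains 0 and 1
enable = [1, 0, 1, 1]   # mask chain 1

print(compress(good), compress(faulty))                                  # 1 1: aliased
print(compress(masked(good, enable)), compress(masked(faulty, enable)))  # 1 0: detected
```

Masking chain 1 removes its fault effect from this cycle, so the error on chain 0 is no longer cancelled.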

Compression ratio:

The compression ratio relates the number of internal scan chains to the number of scan channels. It is defined as the ratio of internal scan chains to scan channels.

Example:

No. of flops in design: 64800

No. of scan channels: 6

No. of internal scan chains: 360

Compression ratio = 360 / 6 = 60x
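Working the example above a little further shows where the test time saving comes from. The sketch assumes perfectly balanced chains and compares against an uncompressed design with one scan chain per scan channel.

```python
flops = 64800
scan_channels = 6
internal_chains = 360

compression_ratio = internal_chains // scan_channels          # 60
cycles_with_compression = flops // internal_chains             # 180 shift cycles per pattern
cycles_without_compression = flops // scan_channels            # 10800 shift cycles per pattern

print(compression_ratio, cycles_with_compression, cycles_without_compression)
```

With balanced chains, the shift cycles per pattern drop by the same factor as the compression ratio.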

Note: The minimum compression ratio is 3 in Synopsys tools.

Compression with bypass:

In scan compression mode, if there is a fault inside the decompressor or compressor logic, the output indicates a faulty circuit. The compression bypass technique connects the scan chains to the scan ports without going through the compression logic, so we can find out whether the compressor or decompressor logic itself is correct.

Formulas:

Test time:

Test time is defined as the time taken to apply all test patterns through the scan chains.

Test time depends on the length of the longest scan chain in the design.

Test cost:

The test cost is proportional to the test time.

Advanced compression technique:

Adaptive scan

- Serializer logic can be added to adaptive scan. It reduces the scan pin count.

- Selective scan channels

Scan compression with adaptive compression:

Adaptive compression:

Main blocks of advanced compression:

Serializer:

- It takes the data from the compressor in parallel and converts it to serial data

Deserializer:

- Serial input patterns from the ATE are converted to parallel data and fed to the decompressor.
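The serializer and deserializer are simple parallel-to-serial and serial-to-parallel converters. This sketch assumes a hypothetical 4-bit-wide decompressor input fed from a single ATE pin, so each parallel word costs four serial clocks.

```python
def serialize(words):
    """Parallel-to-serial: emit each word's bits one per clock, bit 0 first."""
    return [bit for word in words for bit in word]

def deserialize(stream, width):
    """Serial-to-parallel: regroup a bit stream into words of `width` bits."""
    if len(stream) % width:
        raise ValueError("stream length must be a multiple of width")
    return [stream[i:i + width] for i in range(0, len(stream), width)]

words = [[1, 0, 1, 1], [0, 0, 1, 0]]   # hypothetical decompressor input words
stream = serialize(words)              # 8 serial bits on one ATE pin
print(stream, deserialize(stream, 4))
```

The pin saving is paid for with extra serial clocks per internal shift cycle, which is the usual trade-off of serialized compression.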

Advantages:

- Selective scan channels

- Limited scan ports

- Based on the test control signals, it can also be used as a normal compression method

ATPG:

ATPG stands for automatic test pattern generation. The procedure generates input patterns that determine the presence or absence of faults at specific locations in a circuit. Generating patterns to detect faults in a design is called test pattern generation; when it is automated by a DFT tool, it is called automatic test pattern generation.

The test patterns generated are of three types:

- Exhaustive test pattern generation

- Random test pattern generation

- Deterministic test pattern generation

Exhaustive test pattern generation:

The exhaustive test pattern generation technique applies all input combinations and checks the functional correctness of the design. It is not used in ATPG because of its complexity.

Disadvantages:

- Exhaustive testing is not necessary in ATPG

- It is time-consuming

- A design with n inputs requires 2^n patterns
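A quick calculation shows why 2^n patterns rule out exhaustive testing for realistic designs. The tester rate of one billion patterns per second is a hypothetical, generous figure.

```python
from itertools import product

def exhaustive_patterns(n):
    """Every one of the 2**n input vectors of an n-input design."""
    return product((0, 1), repeat=n)

print(sum(1 for _ in exhaustive_patterns(3)))   # 8 patterns for 3 inputs

# Hypothetical tester applying 1e9 patterns per second to a 64-input block
seconds = 2**64 / 1e9
print(seconds / (3600 * 24 * 365))              # roughly 585 years
```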

Random pattern generation:

Random pattern generation uses the LFSR technique to generate random patterns. LFSR stands for linear feedback shift register.

LFSR:

Random pattern generation involves three steps:

- Generate a random pattern

- Determine how many faults the random pattern detects

- Repeat the two steps above until new random patterns detect no new faults
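The three steps can be sketched with a 4-bit Fibonacci LFSR (polynomial x^4 + x^3 + 1, period 15) driving stuck-at fault simulation of a small hypothetical circuit, y = (a AND b) OR (c AND d). The stopping rule, quitting after `patience` consecutive patterns that detect nothing new, is an assumption that makes "no new faults" concrete.

```python
def lfsr(seed, taps, width):
    """Fibonacci LFSR yielding successive `width`-bit states, starting with seed.

    taps are 1-based positions of the feedback polynomial; (4, 3) is
    x^4 + x^3 + 1, a maximal-length polynomial with period 2**4 - 1 = 15.
    """
    state = seed
    while True:
        yield state
        bit = 0
        for t in taps:
            bit ^= (state >> (width - t)) & 1
        state = (state >> 1) | (bit << (width - 1))

NETS = ("a", "b", "c", "d", "y")

def circuit(vec, fault=None):
    """Hypothetical circuit y = (a AND b) OR (c AND d) with an optional stuck-at fault."""
    vals = dict(zip("abcd", vec))
    if fault is not None and fault[0] in vals:
        vals[fault[0]] = fault[1]
    y = (vals["a"] & vals["b"]) | (vals["c"] & vals["d"])
    if fault is not None and fault[0] == "y":
        y = fault[1]
    return y

def random_pattern_atpg(seed=1, patience=3):
    faults = {(net, v) for net in NETS for v in (0, 1)}
    detected, patterns, misses = set(), [], 0
    for state in lfsr(seed, (4, 3), 4):
        vec = tuple((state >> i) & 1 for i in range(4))        # step 1: random pattern
        new = {f for f in faults - detected
               if circuit(vec, f) != circuit(vec)}              # step 2: fault simulation
        if new:
            detected |= new
            patterns.append(vec)
            misses = 0
        else:
            misses += 1
        if misses >= patience or detected == faults:            # step 3: stop condition
            break
    return patterns, detected, faults

patterns, detected, faults = random_pattern_atpg()
print(len(patterns), "patterns kept,", len(detected), "of", len(faults), "faults detected")
```

Only patterns that detect at least one new fault are kept, which is how random pattern sets are usually pruned.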

Deterministic ATPG

Deterministic test pattern generation involves sensitization, propagation, and justification of a particular fault in the design.

Deterministic ATPG is of two types:

- Path sensitization

- Symbolic difference

Symbolic differences:

- Symbolic difference is not widely used.

- ATPG by symbolic difference is based on the well-known Shannon expansion.

- The path sensitization technique is mostly preferred.
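The symbolic (Boolean) difference approach can be sketched by evaluating the Shannon cofactors directly: df/dxi = f(xi=0) XOR f(xi=1). A vector detects xi stuck-at-v when df/dxi = 1 (the fault effect reaches the output) and xi equals the complement of v (the fault is activated). The function f = x1 x2 + x3 is hypothetical, and brute-force enumeration stands in for symbolic manipulation.

```python
from itertools import product

def f(x1, x2, x3):
    """Hypothetical circuit function: f = x1 x2 + x3."""
    return (x1 & x2) | x3

def boolean_difference_tests(func, n, i, stuck_at):
    """Vectors that detect input i stuck-at `stuck_at`, via the Boolean difference."""
    tests = []
    for v in product((0, 1), repeat=n):
        cof0 = func(*v[:i], 0, *v[i + 1:])   # Shannon cofactor with xi = 0
        cof1 = func(*v[:i], 1, *v[i + 1:])   # Shannon cofactor with xi = 1
        if (cof0 ^ cof1) and v[i] == 1 - stuck_at:
            tests.append(v)
    return tests

print(boolean_difference_tests(f, 3, 0, 0))   # x1 stuck-at-0: [(1, 1, 0)]
print(boolean_difference_tests(f, 3, 2, 1))   # x3 stuck-at-1
```

For x1 stuck-at-0, df/dx1 = x2 AND NOT x3, so the only test is x1 = 1, x2 = 1, x3 = 0.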

Path sensitization

Path sensitization is based on the sensitization (activation), propagation, and justification approach. It is generally used for faults that are difficult to test. A well-known implementation is the D-algorithm.

Fault sensitization/activation:

- The fault is activated by driving the faulty net to the value opposite to its stuck-at value.

Propagation:

- A path is selected from the fault site to some primary output

- The fault effect is observed at that output for detection

Justification:

Justification works backward, from output to input: it finds primary input values that produce the values required at the fault site and along the propagation path, so that the output differs between the faulty and fault-free circuits.
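The three steps can be worked by hand on a tiny hypothetical circuit, y = (a AND b) OR c with internal net n = a AND b, for the fault n stuck-at-0. The sketch then cross-checks the hand-derived test by exhaustive simulation; it illustrates the reasoning, not an implementation of the D-algorithm.

```python
from itertools import product

def circuit(a, b, c, n_stuck=None):
    """Hypothetical circuit y = (a AND b) OR c; n_stuck forces internal net n."""
    n = a & b if n_stuck is None else n_stuck
    return n | c

# Hand-derived test for n stuck-at-0:
#   activation:    n must be 1 (opposite of the stuck value)
#   justification: n = a AND b = 1 requires a = 1 and b = 1
#   propagation:   the OR side input c must be 0 (its non-controlling value)
derived = (1, 1, 0)

# Cross-check: every vector where good and faulty outputs differ.
detecting = [v for v in product((0, 1), repeat=3)
             if circuit(*v) != circuit(*v, n_stuck=0)]
print(detecting)   # [(1, 1, 0)]
```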

Fault models:

A fault model describes how physical manufacturing or design defects affect the behavior of a circuit. A successful fault model should satisfy two criteria: it should reflect the behavior of the defect, and it should be computationally efficient for fault simulation and test pattern generation.

There are different fault models:

- Stuck at fault model

- Bridging fault model

- Delay fault model

- IDDQ fault model

Stuck at fault model:

The stuck-at fault model affects the logic state of a line in the circuit, including primary inputs and primary outputs: the line appears to be stuck at a constant logic value. There are two stuck-at faults:

Stuck at – 0 fault

It drives a constant logic 0 at the net output.

Stuck at – 1 fault

It drives a constant logic 1 at the net output.

Bridging fault model:

A short between two elements is commonly called a bridging fault

Two types of bridge fault models exist:

Wired-AND bridging

The net formed by the two shorted lines takes logic “0” whenever either line is driven to 0 (the AND of the two driven values).

Wired-OR bridging

The net formed by the two shorted lines takes logic “1” whenever either line is driven to 1 (the OR of the two driven values).
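A minimal sketch of the two bridging models shows what a test must do: drive the two shorted lines to opposite values, because only then does at least one line take a value different from the one it is driven to. The helper names are hypothetical, and the differing line still has to be propagated to an output to be observed.

```python
def wired_and(v1, v2):
    """Both shorted lines take the AND of their driven values."""
    return v1 & v2, v1 & v2

def wired_or(v1, v2):
    """Both shorted lines take the OR of their driven values."""
    return v1 | v2, v1 | v2

def excites(bridge, v1, v2):
    """True if some line ends up different from the value driven onto it."""
    return bridge(v1, v2) != (v1, v2)

for v1 in (0, 1):
    for v2 in (0, 1):
        print(v1, v2, excites(wired_and, v1, v2), excites(wired_or, v1, v2))
```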

Delay fault model:

A delay fault causes excessive delay along a path such that the total propagation delay falls outside the specified limit

Delay fault models are of two types:

- Transition delay fault

A transition delay fault occurs when a transition at a gate input takes longer than its specified limit to reach the gate output.

- Path delay fault

A path delay fault considers the cumulative propagation delay along a signal path through the circuit under test.

IDDQ fault model:

A “stuck short” fault model is also known as the IDDQ fault model. The defect creates a conducting path between VDD and VSS, which increases the quiescent supply current and the power consumption of the design.

This technique of monitoring steady state power supply current to detect transistor stuck short fault is referred to as IDDQ testing.

Fault classes:

Faults are classified into fault classes based on how they were detected and why they could not be detected.

There are two top-level fault classes:

- Untestable

- Testable

Testable (TE)

Testable faults are all the faults that cannot be proven untestable. The testable faults are classified as:

Detected (DT)

Includes all faults that the ATPG process identifies as detected:

- Det-simulation

- Det-implication

Posdet (PD)

Includes all faults that fault simulation identifies as possibly detected but not hard detected:

- Posdet-testable(PT)

- Posdet-untestable(PU)

Atpg –untestable (AU)

- The ATPG-untestable fault class includes all faults for which the test generator cannot find a pattern that creates a test.

- These faults may be testable, but become untestable due to ATPG limitations.

Undetected (UD)

The undetected fault class includes faults that are not detected but cannot be proven untestable or ATPG-untestable:

- Un-controllable (UC)

- Un-observable (UO)

Untestable (UT):

Untestable faults are faults that no pattern can detect or possibly detect. They cannot cause functional failures, so the tool can exclude them. The untestable faults are classified as:

- Unused fault (UU)

Includes all faults on circuitry that is unconnected to any circuit observation point, and faults on floating primary outputs.

- Tied fault (TI)

Includes all faults on circuitry where the fault point is tied to a particular value.

- Blocked faults (BL)

Includes all faults on circuitry for which tied logic blocks all paths to an observable point.

- Redundant faults (RE)

Includes all faults that the test generator considers untestable under any condition.

SAF(Stuck at faults):

SAF means stuck-at fault. The SAF model describes a line in a logic circuit, including primary inputs and primary outputs, whose logic state cannot change.

It appears to be stuck at a constant logic value. Stuck at fault model types:

Stuck at – 0 fault

It drives a constant logic 0 at the net output.

Stuck at – 1 fault

It drives a constant logic 1 at the net output.

TDF(transition delay fault)

A transition delay fault (TDF) occurs when a transition on a particular node does not produce the expected result at the maximum operating speed.

TDFs are classified into two types:

- Slow to rise

- Slow to fall

Slow to rise:

When the circuit operates at its maximum frequency and a particular node cannot complete the transition from 0 to 1 in time, the fault is called “slow to rise”.

Slow to fall:

When the circuit operates at its maximum frequency and a particular node cannot complete the transition from 1 to 0 in time, the fault is called “slow to fall”.

Transition delay test approach:

In the TDF test approach we generate an initialization vector and a launch vector. Based on how the launch vector is generated, the test is categorized into two main types:

Launch on capture (LOC)

- The launch value is produced through the functional capture path

Disadvantages:

- Coverage in LOC can be lower because some controllability is missing in some cases.

Launch on shift (LOS)

The launch value is applied through the shift path, by the last shift cycle.

Launched on an extra shift (LOES)

It uses a pipelined architecture to avoid the limitations of LOS.

Test coverage & fault coverage

- Test coverage

Test coverage, which is a measure of test quality, is the percentage of faults detected among all testable faults.

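- Fault coverage

Fault coverage is the percentage of faults detected among all faults in the fault list, including untestable faults.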
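The difference between the two metrics is only the denominator, which a short calculation makes visible. This is a simplified sketch: only DT faults count as detected (no partial credit for PD), UT lumps together the untestable classes (UU, TI, BL, RE), and the fault counts are hypothetical.

```python
def coverages(counts):
    """Return (test_coverage, fault_coverage) in percent from fault class counts."""
    total = sum(counts.values())
    detected = counts["DT"]
    untestable = counts["UT"]
    test_cov = 100.0 * detected / (total - untestable)   # testable faults only
    fault_cov = 100.0 * detected / total                 # all faults
    return test_cov, fault_cov

# Hypothetical fault counts per class
counts = {"DT": 9000, "PD": 100, "AU": 200, "UD": 200, "UT": 500}
print(coverages(counts))
```

Because untestable faults are excluded from its denominator, test coverage is always at least as high as fault coverage.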

Inputs & output of ATPG:

ATPG DRC rules:

To generate ATPG patterns for a design, the design must comply with a set of design rules, because violations limit fault coverage. These violations must be avoided.

ATPG DRCs:

- Clock rule checking

- Data rule checking

- Scan rule checking

- Compression rule checking

- Path delay rules

Atpg commands


Simulation:

Simulation lets complex waveforms be built in a very short time. In DFT simulation we run the patterns on the design through a testbench and compare the expected values in the patterns with the simulated values. When they match, the simulation passes; otherwise a mismatch (design violation) is reported.

Circuit simulation:

Circuit simulation is the imitation of a circuit's behavior by an alternative means, typically a computer program.

Fault simulation:

Fault simulation simulates the circuit in the presence of faults to determine which faults the test patterns detect. It can be:

- Serial fault simulation

- Parallel fault simulation

Simulation types:

Simulation is of two types:

- Serial simulation

- Parallel simulation

Before performing simulation, first check the design setup with a chain test.

Chain test:

The chain test checks that all scan chains work properly. It verifies chain connectivity and net connectivity by shifting patterns through the chains and checking that they all pass.
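A chain test can be sketched as shifting a known pattern through the chain and checking that it comes out unchanged after chain-length shift cycles. A toggling pattern such as 0011 is a common choice because it exercises both values and both transitions; the stuck-cell fault and helper names are hypothetical.

```python
def shift_through(chain_len, pattern, stuck_cell=None, stuck_value=0):
    """Shift `pattern` through a scan chain, then flush with zeros; return scan-out bits."""
    state = [0] * chain_len
    out = []
    for bit in list(pattern) + [0] * chain_len:
        out.append(state[-1])                 # scan-out before the clock edge
        state = [bit] + state[:-1]            # one shift clock
        if stuck_cell is not None:
            state[stuck_cell] = stuck_value   # a broken cell forces its output
    return out

def chain_test(chain_len, pattern, **fault):
    """Pass if the pattern comes out unchanged after chain_len shift cycles."""
    expected = [0] * chain_len + list(pattern)
    return shift_through(chain_len, pattern, **fault) == expected

print(chain_test(3, [0, 0, 1, 1]))                                 # True
print(chain_test(3, [0, 0, 1, 1], stuck_cell=1, stuck_value=0))    # False
```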

Serial simulations:

In serial simulation, the test pattern is given one by one and then analyzed for output response of the circuit.

Parallel simulations:

In parallel simulation, the patterns are applied to the scan cells in parallel and the output response is taken, so each response needs only one clock instead of serial shifting.

Simulation is based on two approaches:

- Zero delay simulation/no timing simulation

- With timing simulation

Zero delay simulation:

Zero delay simulation is performed without considering timing and checks the circuit connectivity. Its inputs are the netlist and the data patterns.

With timing simulations:

Timing simulation takes the timing of each path into account while simulating the patterns and capturing the response.

Timing simulation is done with two methods:

SDF min

It considers the minimum timing case, i.e. the best-case (fastest) timing.

SDF max

It considers the maximum timing case, i.e. the worst-case (slowest) timing.

Why do we do simulation once we have done ATPG?

ATPG and simulation libraries are different. ATPG libraries provide the functionality of a cell, whereas simulation libraries also contain timing checks. These timing checks must be run for every design before the patterns go to the ATE for testing, because simulation libraries model real-life behavior. ATPG libraries cannot be used for timing checks, and the ATPG tool cannot read libraries with timing checks. Because of this library mismatch, we run both ATPG and simulation to ensure the design is fit for real use.

Simulation mismatches:

Test patterns are verified using device timing information to ensure they can be applied to real circuits. If there are any mismatches between the timing-based simulation results and the expected results, the patterns must be corrected. If they are not corrected, good devices may be rejected during manufacturing test.

The most common simulation mismatches:

- Timing issues

- Library mismatches.

- Design rule violations

- Clock rule violation

- Bidirectional inputs/outputs

Simulation commands


JTAG

JTAG stands for Joint Test Action Group.

It is the IEEE 1149.1 standard method for testing PCB interconnections, implemented inside the integrated circuits.

Only one data register is connected between TDI and TDO at a time.

JTAG builds test points into chips and tests the interconnections between them.

The main components of JTAG are as follows:

- TAP controller

- Data register

- Instruction register

Architecture of JTAG:

Test data registers:

- Instruction register

- Bypass register

- Boundary register

- Identification register*

- User-defined registers

Boundary scan cells:

Boundary scan is a method for testing the interconnections of a PCB. It is also used for debugging, to monitor the states of the integrated circuit pins. Using the INTEST and EXTEST instructions, values at the pins can be driven and captured.

Operating mode:

- Normal mode

- Scan mode

- Capture mode

- Update mode

TAP controller

The TAP controller is a 16-state state machine.

All state transitions of the TAP controller are based on the TMS and TCLK signals. The TAP controller connections are:

- TCLK

- TMS

- TDI

- TDO
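The 16-state TAP controller can be written down as a transition table: on each rising edge of TCLK, the next state depends only on the current state and TMS. This sketch encodes the IEEE 1149.1 state diagram; the `walk` helper is a hypothetical name. It also shows the standard property that five TCLK cycles with TMS = 1 reach Test-Logic-Reset from any state.

```python
# (next state when TMS = 0, next state when TMS = 1), per the IEEE 1149.1 state diagram
TAP = {
    "Test-Logic-Reset": ("Run-Test/Idle", "Test-Logic-Reset"),
    "Run-Test/Idle":    ("Run-Test/Idle", "Select-DR-Scan"),
    "Select-DR-Scan":   ("Capture-DR", "Select-IR-Scan"),
    "Capture-DR":       ("Shift-DR", "Exit1-DR"),
    "Shift-DR":         ("Shift-DR", "Exit1-DR"),
    "Exit1-DR":         ("Pause-DR", "Update-DR"),
    "Pause-DR":         ("Pause-DR", "Exit2-DR"),
    "Exit2-DR":         ("Shift-DR", "Update-DR"),
    "Update-DR":        ("Run-Test/Idle", "Select-DR-Scan"),
    "Select-IR-Scan":   ("Capture-IR", "Test-Logic-Reset"),
    "Capture-IR":       ("Shift-IR", "Exit1-IR"),
    "Shift-IR":         ("Shift-IR", "Exit1-IR"),
    "Exit1-IR":         ("Pause-IR", "Update-IR"),
    "Pause-IR":         ("Pause-IR", "Exit2-IR"),
    "Exit2-IR":         ("Shift-IR", "Update-IR"),
    "Update-IR":        ("Run-Test/Idle", "Select-DR-Scan"),
}

def walk(state, tms_bits):
    """Return the TAP state after applying one TMS value per TCLK rising edge."""
    for tms in tms_bits:
        state = TAP[state][tms]
    return state

print(walk("Test-Logic-Reset", [0, 1, 0, 0]))       # Shift-DR
print(walk("Test-Logic-Reset", [0, 1, 1, 0, 0]))    # Shift-IR
```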

Instruction register:

The instruction register allows instructions to be shifted into the design. The instruction register selects the test to be performed and instructs which data register is accessed.

Instructions for tests are classified into two types:

- Mandatory instructions

- Optional instructions

Mandatory instructions:

These instructions must always be supported in JTAG. They play the main role in interconnection checking:

- Extest

- Bypass

- Sample

- Preload

Optional instructions:

Optional instructions are mainly used for special testing purposes.

They are not required; they are used only when those special tests are needed.

The optional instructions are:

- Intest

- Runbist

- Highz

- Usercode

- Clamp

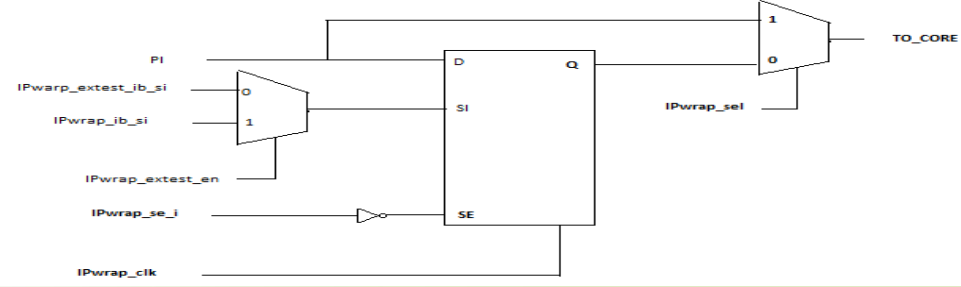

Wrapper cell:

Wrapper cells are part of the IEEE 1500 (P1500) standard method for testing block-level interconnections.

Wrappers are placed around blocks to get control at the block level. There are two types of wrapper chains:

- Input wrapper chain

- Output wrapper chain

Input wrapper chain:

Output wrapper chain:

Operating modes:

- Inbound test

It is an internal core test.

- Outbound test

It is a top-level test.

Types of wrapper cells:

Wrapper chains consist of two types of wrapper cells:

- Dedicated wrapper cell

- Shared wrapper cell

Dedicated wrapper cell

A new cell is added into the design for test-purpose and it controls and observes the input and output.

Disadvantages:

- Area overhead

- Additional delay is added

Shared wrapper cell

An existing functional cell is reused to isolate the input and output for testing purposes.

Disadvantages:

- The tool requires additional analysis to identify the flops that can be shared. Identification can become complex depending on the fan-out.

Important definitions:

Yield : The yield of a manufacturing process is defined as the percentage of acceptable parts among all parts fabricated.

DPPM: DPPM means defective parts per million, the number of defective parts among the manufactured parts, expressed per million parts.

Rejection ratio:

A few faulty parts escape testing. The rejection ratio is the ratio of these escaped faulty parts to the tested parts.
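The yield and DPPM definitions reduce to simple ratios. This sketch uses hypothetical lot numbers and one common DPPM convention, defective parts among shipped parts, scaled to one million.

```python
def yield_percent(acceptable, fabricated):
    """Percentage of acceptable parts among all fabricated parts."""
    return 100.0 * acceptable / fabricated

def dppm(defective_shipped, shipped):
    """Defective parts per million shipped parts."""
    return 1e6 * defective_shipped / shipped

# Hypothetical lot: 100000 parts fabricated, 90000 pass and ship, 45 of them defective
print(yield_percent(90000, 100000))   # 90.0
print(dppm(45, 90000))                # 500.0
```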

ICG:

ICG means integrated clock gating. It is mainly used for power saving in a design: it disables the clock to a particular portion of the design when that portion is not in use.

Advantages:

- Power saving technique

Disadvantages:

- Additional area overhead

- Extra effort is required

- Requires careful design of the gating circuitry